The world of robotics and artificial intelligence (AI) has long captivated the human imagination. From science fiction films to cutting-edge research labs, the idea of machines capable of acting completely independently—without human intervention or oversight—has sparked both excitement and apprehension. Will robots truly achieve autonomy, or will they always need human oversight? This question has profound implications for industries ranging from manufacturing and healthcare to transportation and even space exploration.

This article delves into the concept of robotic autonomy, exploring the technology behind it, the barriers to achieving true autonomy, and the ongoing need for human oversight. By the end, we will have a deeper understanding of whether robots can truly operate without human intervention, or whether their role will always be that of a tool under human control.

The Current State of Robotics

Robots are already integrated into numerous industries, performing tasks ranging from simple repetitive actions to more complex operations requiring problem-solving capabilities. Some of the most well-known applications of robots include:

- Manufacturing: Robots have long been used in assembly lines, performing repetitive tasks like welding, painting, and assembly of parts. These robots are highly efficient but operate within strict parameters and are typically not capable of decision-making beyond their programmed tasks.

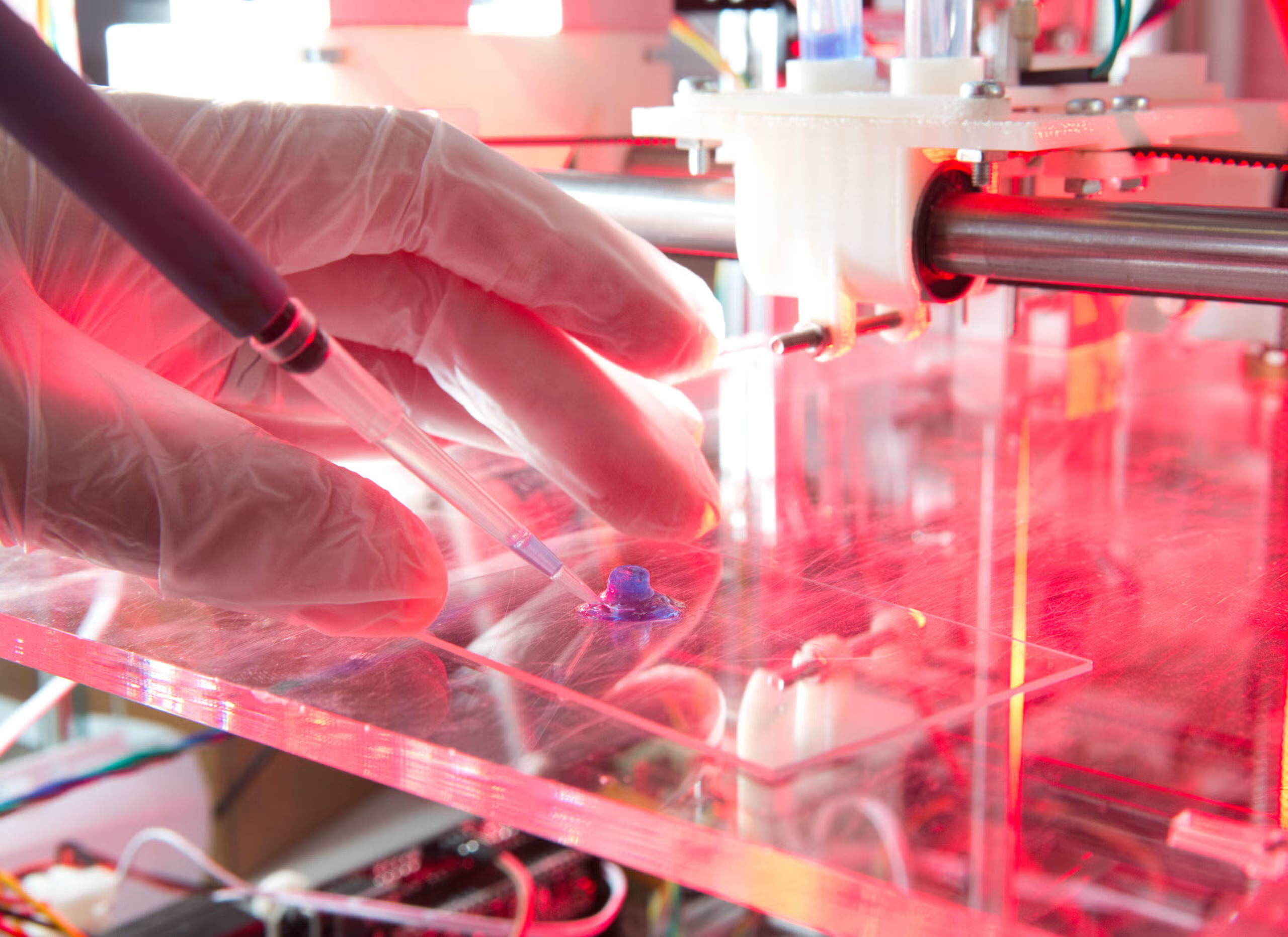

- Healthcare: Surgical robots like the da Vinci system offer precision in surgeries that require a high degree of accuracy. Though these robots aid surgeons, they still require human guidance and oversight throughout the procedure.

- Autonomous Vehicles: Self-driving cars are among the most high-profile examples of robots aimed at achieving autonomy. These vehicles use cameras and other sensors, together with machine learning algorithms, to navigate and make decisions in real time. While they can drive without human input in certain environments, they still require human oversight, especially in complex or unpredictable situations.

- Robotic Assistants: Robots like Boston Dynamics’ Spot are designed to perform a variety of tasks, from inspecting hazardous environments to helping with logistics. Though impressive, these robots are still not fully autonomous in the sense that they rely on human input for more complex decision-making.

While these examples highlight the impressive capabilities of robots, they also reveal the significant limitations they face in achieving true autonomy. Most robots today are only capable of performing tasks within predefined rules and environments. They can automate specific jobs, but their range of actions and decision-making abilities remain limited.

Defining True Autonomy

Before examining whether robots can achieve true autonomy, it’s important to define what we mean by the term. Autonomy, in the context of robotics, refers to the ability of a machine to perform tasks without human intervention, making decisions based on its environment and objectives, and adapting to new situations as they arise. True autonomy would imply that a robot could operate completely independently, without needing constant human guidance, adjustments, or oversight.

This level of autonomy involves several key characteristics:

- Decision-Making Capacity: The robot must be capable of processing information from its environment and making decisions based on that data. This requires advanced AI, often using machine learning techniques, to enable the robot to “learn” from its experiences.

- Adaptability: True autonomy demands that a robot can handle unpredictable or novel situations. Unlike current robots that perform well in structured environments, autonomous robots must be able to adapt to new, unforeseen circumstances.

- Goal-Oriented Behavior: The robot must understand its objectives and be able to pursue them without needing continuous input from a human operator. For example, an autonomous delivery drone must be able to navigate obstacles, avoid hazards, and choose the best route to deliver packages.

- Ethical and Safety Considerations: A truly autonomous robot must make ethical decisions in complex, real-world situations. For example, an autonomous car would need to make split-second decisions in emergency scenarios, such as choosing between swerving to avoid an obstacle and staying on course, based on which option minimizes harm.
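As a rough illustration of the goal-oriented behavior described in the list above, the sketch below plans a route across a small grid map using breadth-first search. The grid layout, the `#` obstacle marker, and the function name `shortest_route` are assumptions made for this example; a real delivery drone would plan in three dimensions with far richer cost models.

```python
from collections import deque

def shortest_route(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    grid  : list of equal-length strings; '#' marks an obstacle (assumed format)
    start : (row, col) of the robot
    goal  : (row, col) of the destination
    Returns the list of cells from start to goal, or None if no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None                        # goal is unreachable

# Hypothetical map: the only open route runs down the left edge and along the bottom.
GRID = [
    "..#.",
    ".##.",
    "....",
]
route = shortest_route(GRID, (0, 0), (2, 3))
print(route)
```

Here the robot is given only a map and a goal, and the sequence of moves is its own choice. What the sketch leaves out is the hard part: building an accurate map from imperfect sensors and replanning when the world changes.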

Given these criteria, achieving true autonomy is a monumental challenge. Let’s explore the technological barriers standing in the way.

The Technological Barriers to True Autonomy

1. Complexity of the Environment

One of the major challenges in creating autonomous robots is the complexity of the real world. Environments are dynamic, unpredictable, and often chaotic. A robot’s sensors may supply a wealth of data, but interpreting that data accurately in real time is a hard problem.

For example, consider an autonomous car navigating through a busy city. It must account for pedestrians, cyclists, other vehicles, traffic signals, road conditions, and countless other variables. In some situations, even advanced AI might struggle to interpret the best course of action, especially in environments with rapidly changing variables.

While AI has made great strides in fields like computer vision and object recognition, it still struggles with understanding context in the way that humans do. Humans can quickly assess situations that require emotional or ethical decision-making, something that current AI is far from mastering.

2. Ethical Decision-Making

Ethical decision-making presents another significant barrier. As mentioned earlier, an autonomous robot, especially one in a high-risk environment like a self-driving car or medical robot, must be able to make decisions that are not only technically correct but also morally justifiable.

The “trolley problem,” a well-known ethical thought experiment, illustrates the difficulty robots face in making moral decisions. Imagine an autonomous car that must choose between swerving, which would hit a pedestrian, and staying on course, which would injure its occupant. How should it decide? Current AI systems are not equipped to handle these types of decisions in a human-like way, and building an ethical framework that all societies agree upon remains a complex challenge.

3. Machine Learning Limitations

AI and machine learning (ML) are the backbone of autonomous systems. Through training on vast datasets, ML algorithms allow robots to recognize patterns and make decisions based on new input. However, machine learning has limitations in its ability to generalize beyond what it has been trained on.

For example, a self-driving car trained on roads in one region may not perform well in a different environment, with different weather conditions or driving norms. Although reinforcement learning, a branch of ML, allows for more adaptive learning, robots still face significant challenges when it comes to transferring their learned knowledge to entirely new situations.
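The generalization problem can be shown with a deliberately tiny example. The sketch below assumes a single made-up feature, an image "contrast score" between 0 and 1, and a hypothetical fog effect that halves every score. The classifier is just a threshold learned from sunny-day data; none of the numbers come from a real vehicle.

```python
from statistics import mean

def fit_threshold(samples):
    """Learn a 1-D decision boundary: the midpoint between the two class means."""
    obstacle = [x for x, label in samples if label == "obstacle"]
    clear = [x for x, label in samples if label == "clear"]
    return (mean(obstacle) + mean(clear)) / 2

def predict(threshold, score):
    # High contrast is taken to mean an obstacle (an assumption of this toy model).
    return "obstacle" if score > threshold else "clear"

def accuracy(threshold, samples):
    correct = sum(predict(threshold, x) == label for x, label in samples)
    return correct / len(samples)

# Made-up training data gathered in sunny conditions.
SUNNY = [
    (0.80, "obstacle"), (0.90, "obstacle"), (0.85, "obstacle"), (0.75, "obstacle"),
    (0.10, "clear"), (0.20, "clear"), (0.15, "clear"), (0.25, "clear"),
]
# Same scenes in fog, modeled here as every contrast score being halved.
FOGGY = [(x * 0.5, label) for x, label in SUNNY]

threshold = fit_threshold(SUNNY)
print("sunny accuracy:", accuracy(threshold, SUNNY))
print("foggy accuracy:", accuracy(threshold, FOGGY))
```

With these numbers the model is perfect on the conditions it was trained on and no better than a coin flip in fog, because every foggy obstacle now falls below the learned threshold. Real systems use far more features and data, but the failure has the same shape: the model is evaluated on inputs drawn from a different distribution than the one it learned from.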

4. Hardware Limitations

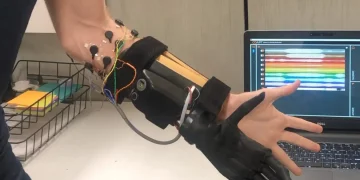

Robots rely on sensors, actuators, and other hardware to interact with their environment. While advances in robotics have led to improved sensors, these devices are still not perfect. Sensors can be affected by weather conditions like fog, rain, or snow, and they can be confused by objects with similar shapes or appearances. Similarly, robotic actuators—used for movement and interaction with the world—must be precise and durable to handle the complexities of various tasks.

At the moment, robots lack the sensory and motor precision that humans take for granted. For instance, robots may struggle with tasks that require dexterity, such as picking up fragile objects, or tasks that require a deep understanding of spatial relationships, like navigating through crowded areas.
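One common way to cope with an unreliable sensor is to combine it with others and trust each in proportion to how noisy it currently is. The sketch below uses inverse-variance weighting for that purpose. The sensor names, distances, and variances are invented for illustration, and the method assumes the sensors' errors are independent and unbiased, which often does not hold in practice.

```python
def fuse(readings):
    """Inverse-variance weighted average.

    readings : list of (value, variance) pairs, one per sensor
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, readings)) / total
    return value, 1.0 / total

# Hypothetical distance-to-obstacle estimates in metres.
radar = (12.0, 1.0)           # radar noise assumed unaffected by weather
camera_clear = (10.0, 0.25)   # camera is precise in clear weather
camera_fog = (10.0, 4.0)      # same reading, but far noisier in fog

clear_estimate = fuse([camera_clear, radar])
fog_estimate = fuse([camera_fog, radar])
print("clear:", clear_estimate)
print("fog:  ", fog_estimate)
```

In clear weather the fused estimate sits close to the camera's reading; in fog it moves toward the radar's, and the fused variance grows. The weakness is that the robot has to know how noisy each sensor is at the moment, and estimating that reliably is itself a hard problem.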

The Case for Human Oversight

Given these technological challenges, many experts believe that robots will require some degree of human oversight for the foreseeable future. There are several reasons for this:

1. Human Judgment

As mentioned earlier, robots still struggle with making decisions that require human-like judgment, especially in ethical or ambiguous situations. While AI can be trained to recognize patterns, it lacks the ability to apply common sense or intuition in the way humans can.

In healthcare, for example, robotic systems can assist doctors in surgeries, but the final decision often rests with the human practitioner. A robot may suggest a course of action, but a human doctor must weigh other factors, such as the patient’s history, preferences, and potential risks, before making a decision.

2. Accountability

In autonomous systems, accountability becomes a critical issue. If a robot makes a mistake that causes harm, who is responsible? Is it the manufacturer, the developer, or the robot itself? Until robots can explain their reasoning as clearly as a human can, we will likely need human oversight so that a person, rather than the machine, remains answerable for the outcome.

3. Safety and Security

The potential for robots to be hacked or manipulated also raises concerns. As robots become more integrated into critical infrastructure like power grids or transportation systems, the risk of cyber-attacks increases. Human oversight can provide a layer of security, ensuring that robots behave as expected and are not used maliciously.

4. Trust Issues

Despite the advancements in AI, public trust in autonomous systems remains a barrier. People are understandably wary of robots making critical decisions without human intervention, particularly in situations where lives are at stake. Human oversight ensures that there is someone who can step in when things go wrong, even if the technology is capable of acting on its own.

The Future of Autonomy in Robotics

While achieving true autonomy remains a distant goal, the future of robotics is promising. AI, machine learning, and sensor technology are steadily improving, making robots more capable and reliable. In certain controlled environments, such as factory floors or warehouses, robots are already achieving a high degree of autonomy.

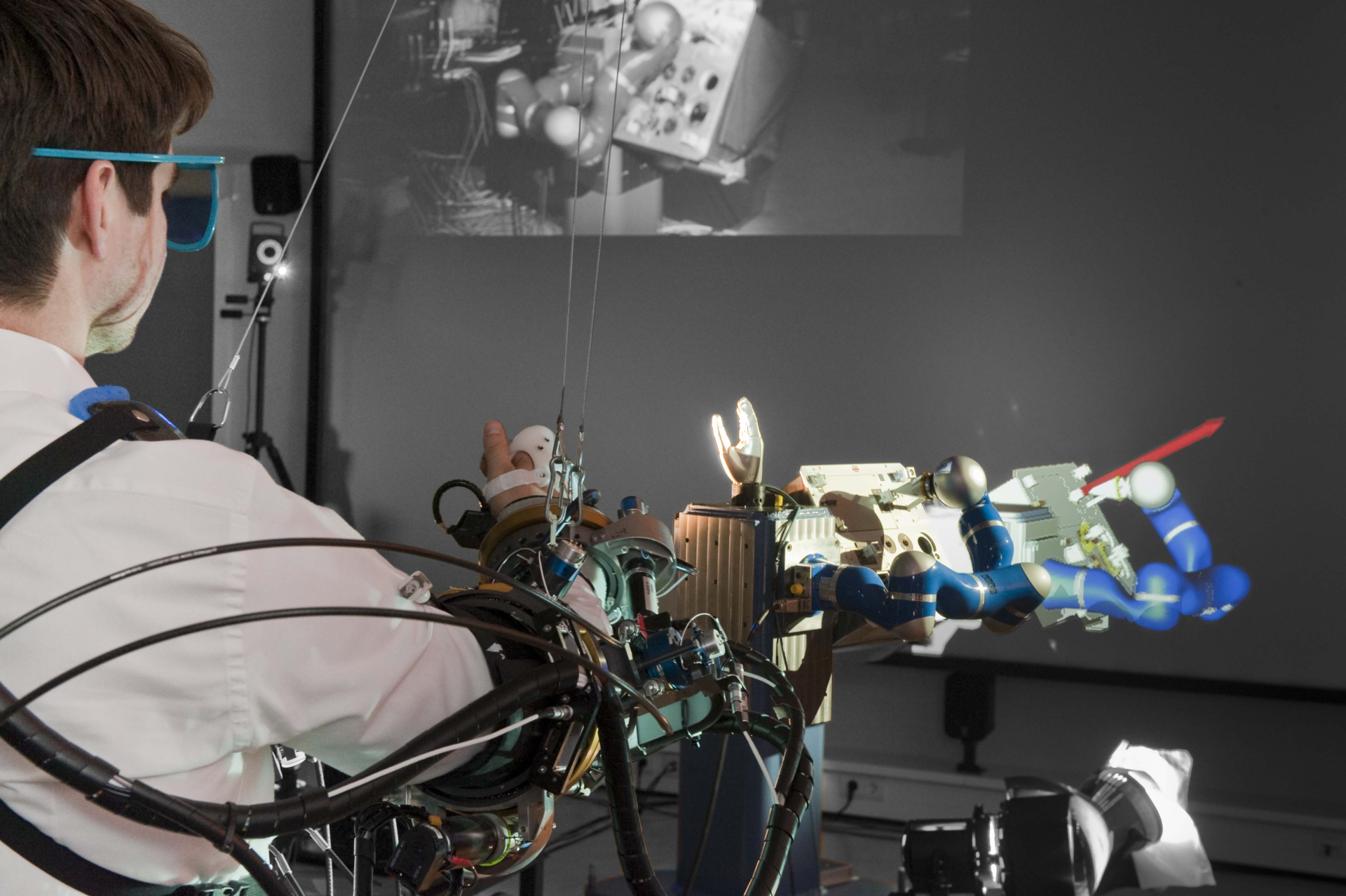

In the future, we may see robots that can operate independently in specific tasks, but it’s unlikely that they will ever be completely autonomous in all situations. Instead, the most likely scenario is a hybrid model where robots perform tasks independently but under human oversight. This could take the form of “collaborative robots” (cobots) that work alongside humans, leveraging both human judgment and robotic efficiency.

In high-stakes environments, such as healthcare or transportation, it is likely that human oversight will remain a critical component, ensuring that robots can act autonomously but with the safety net of human expertise and ethical considerations.
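The hybrid model described above can be sketched as a simple gate: the robot acts on its own when it is confident and the stakes are low, and hands the decision to a person otherwise. Everything named here (`Proposal`, `supervise`, the 0.9 threshold, the `cautious_operator` stand-in) is hypothetical, and the sketch assumes the robot's confidence score is meaningful, which real systems cannot take for granted.

```python
from dataclasses import dataclass

@dataclass
class Proposal:
    action: str         # what the robot intends to do
    confidence: float   # 0.0 to 1.0, as reported by a hypothetical planner
    high_stakes: bool   # e.g. a surgical step or a manoeuvre near pedestrians

def supervise(proposal, ask_human, threshold=0.9):
    """Return (action, decided_by) for one proposed action.

    ask_human is any callable that takes the proposal and returns the
    action a human operator chooses instead.
    """
    if proposal.high_stakes or proposal.confidence < threshold:
        return ask_human(proposal), "human"
    return proposal.action, "robot"

def cautious_operator(proposal):
    # Stand-in for a real operator console: always halts the robot.
    return "stop and wait"

routine = supervise(Proposal("continue route", 0.97, False), cautious_operator)
unsure = supervise(Proposal("continue route", 0.60, False), cautious_operator)
critical = supervise(Proposal("begin incision", 0.99, True), cautious_operator)
print(routine, unsure, critical)
```

Only the routine, high-confidence action is carried out without a person in the loop. The high-stakes action is escalated even at 0.99 confidence, which reflects the point made above: in such settings oversight is a matter of policy, not only of how certain the machine is.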

Conclusion

The journey toward true robotic autonomy is an exciting one, filled with both challenges and potential. While robots are becoming increasingly capable of performing tasks independently, achieving true autonomy—where machines can make decisions and adapt to unpredictable environments—is still a distant goal. For now, and likely for the foreseeable future, robots will continue to rely on human oversight, ensuring that they act safely, ethically, and in accordance with human values.

As technology advances, the role of humans in robotics may shift, but it is clear that humans will continue to play a crucial role in the development and oversight of autonomous systems. The future of robotics will likely be one of collaboration rather than total independence, as robots and humans work together to achieve common goals.