Introduction: The Rise of Ethical Robotics

As technology rapidly advances, one of the most profound questions at the intersection of artificial intelligence (AI) and robotics is whether machines could one day make ethical decisions. We are already familiar with robots that assemble cars, deliver packages, or even assist in surgery. Ethical decision-making, however, adds a new level of complexity. Could we trust robots to make moral judgments that align with human values, or would this lead to unforeseen consequences?

This article explores the potential, challenges, and implications of robots making ethical decisions, focusing on AI’s role in shaping the future of humanity and society.

1. What is Ethical Decision-Making?

At its core, ethical decision-making is the process of choosing among options by judging which are right or wrong, just or unjust. Human beings make ethical decisions based on personal values, societal norms, and a sense of empathy and justice. These decisions are influenced by emotional intelligence, past experiences, cultural beliefs, and sometimes religious teachings.

For example, deciding whether to tell the truth in a difficult situation or deciding how to balance profit with environmental sustainability involves ethical reasoning. But what happens when a machine, devoid of emotions and cultural background, is tasked with making a similar decision?

2. The Concept of Ethical Robots

Robots with ethical decision-making capabilities would need an underlying framework that guides their actions and judgments. This could be developed using a variety of programming techniques, such as:

- Machine Learning: Robots can be trained on large datasets of ethical dilemmas paired with human responses, learning to predict the response a person would give in a similar situation.

- Reinforcement Learning: A form of machine learning in which robots learn through trial and error, receiving rewards or penalties based on whether their actions align with ethical guidelines.

- Rule-Based Systems: Robots could follow pre-programmed ethical principles, much like Asimov’s fictional “Three Laws of Robotics,” which are meant to ensure that robots protect and obey humans without causing harm.

However, a key challenge is that ethics is contested and often context-dependent. What one person views as ethical may be considered unethical by someone else. For robots to make ethical decisions, they would need a decision-making process that adapts to context while remaining consistent across similar cases.
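To make the rule-based option above more concrete, here is a minimal sketch of prioritized rules loosely modelled on the Three Laws. The `Action` flags, the rule list, and the `choose` function are all hypothetical names introduced for illustration. The sketch also assumes that someone has already labelled each action's side effects, which in practice is the hard part.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Action:
    """A candidate action with hypothetical, hand-labelled side effects."""
    name: str
    harms_human: bool = False
    disobeys_order: bool = False
    endangers_robot: bool = False


@dataclass(frozen=True)
class Rule:
    description: str
    violated_by: Callable[[Action], bool]


# Rules in priority order, most important first (illustrative only).
RULES: List[Rule] = [
    Rule("do not harm a human", lambda a: a.harms_human),
    Rule("obey human orders", lambda a: a.disobeys_order),
    Rule("protect your own existence", lambda a: a.endangers_robot),
]


def most_serious_violation(action: Action) -> Optional[int]:
    """Return the index of the highest-priority rule the action breaks, or None."""
    for index, rule in enumerate(RULES):
        if rule.violated_by(action):
            return index
    return None


def choose(actions: List[Action]) -> Action:
    """Prefer actions that break no rule; otherwise break the least important one."""
    def rank(action: Action) -> int:
        violation = most_serious_violation(action)
        return len(RULES) if violation is None else violation

    return max(actions, key=rank)


candidates = [
    Action("push bystander aside", harms_human=True),
    Action("ignore the order", disobeys_order=True),
    Action("shield the human", endangers_robot=True),
]
print(choose(candidates).name)  # shield the human
```

The fixed ordering is what makes the system consistent, and it is also what makes it rigid: the rules cannot weigh context that was not encoded in the flags.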

3. Could Robots Truly Make Ethical Decisions?

The question of whether robots could genuinely make ethical decisions hinges on several factors, including the nature of AI itself and the intricacies of moral philosophy. There are two main schools of thought on this issue:

- The Optimistic View: Advocates of this perspective argue that AI and robots could eventually develop the ability to make moral decisions through advanced algorithms. They believe that with enough data, machines could mimic human ethical reasoning and become reliable decision-makers in areas like healthcare, law enforcement, and even warfare.

- The Skeptical View: Critics, on the other hand, argue that ethical decision-making requires more than just logical analysis. It requires a deep understanding of emotions, human values, and social context—things that robots lack. Machines might make decisions based solely on calculations, without any real understanding of human suffering, happiness, or social consequences. This could result in ethically questionable decisions, even if the machine’s programming appears flawless.

4. Ethical Frameworks for Robots: Can Machines Understand Morality?

Ethical frameworks, such as utilitarianism, deontology, and virtue ethics, form the foundation of moral decision-making in humans. Can these be applied to machines?

- Utilitarianism: This ethical theory suggests that the best action is the one that maximizes happiness or well-being for the greatest number of people. A robot following a utilitarian approach might make decisions that prioritize overall societal benefits over individual rights. While this sounds appealing in theory, the robot might overlook minorities’ needs or fail to account for long-term consequences.

- Deontology: Deontologists believe that actions should follow certain moral rules or duties, regardless of the outcomes. A robot with a deontological approach would follow strict rules, even if the consequences aren’t ideal. For example, it might never lie, even if lying would save lives, as it considers the act of lying inherently wrong.

- Virtue Ethics: This approach emphasizes the character and moral virtues of the decision-maker rather than the rules or outcomes. A robot based on virtue ethics would be programmed to make decisions that reflect virtues like honesty, courage, and compassion. However, this approach is difficult to implement in machines since it requires a deep understanding of human emotions and behaviors.
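The contrast between the first two frameworks can be illustrated with a toy sketch. It assumes, unrealistically, that well-being can be reduced to a single number per person and that duties can be listed as plain labels. The `Option` type, the welfare figures, and both chooser functions are hypothetical. Virtue ethics is left out because it does not reduce to a simple scoring rule.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Option:
    name: str
    welfare: Dict[str, float]  # made-up change in well-being per affected person
    breaks: Set[str] = field(default_factory=set)  # duties this option would violate


def total_welfare(option: Option) -> float:
    return sum(option.welfare.values())


def utilitarian_choice(options: List[Option]) -> Option:
    """Pick the option with the greatest summed well-being, ignoring rules."""
    return max(options, key=total_welfare)


def deontological_choice(options: List[Option], duties: Set[str]) -> Optional[Option]:
    """Discard any option that breaks a duty, whatever its outcome.

    Using welfare to break ties among permitted options is an assumption
    of this sketch, not part of deontology itself.
    """
    permitted = [o for o in options if not (o.breaks & duties)]
    if not permitted:
        return None
    return max(permitted, key=total_welfare)


options = [
    Option("lie to protect someone", {"victim": 10.0, "aggressor": -1.0}, {"do_not_lie"}),
    Option("tell the truth", {"victim": -10.0, "aggressor": 1.0}),
]

print(utilitarian_choice(options).name)                    # lie to protect someone
print(deontological_choice(options, {"do_not_lie"}).name)  # tell the truth
```

The two functions disagree on exactly the lying example described above, which is the point: the framework chosen by the designer, not the data, determines the outcome.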

5. Real-World Applications of Ethical Robots

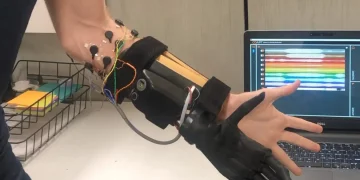

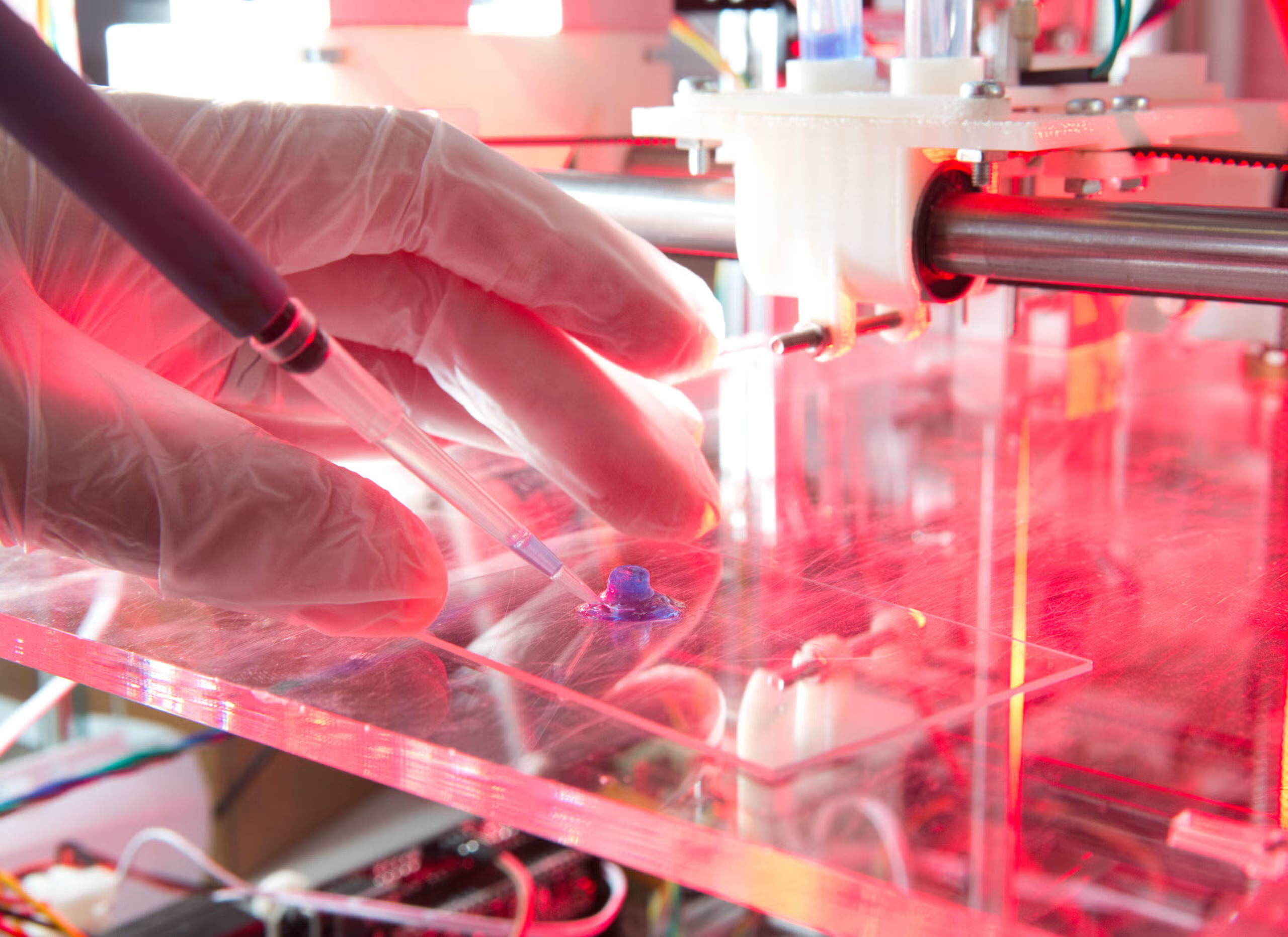

While the concept of robots making ethical decisions may seem futuristic, there are already real-world scenarios where AI is being tasked with ethical choices:

- Autonomous Vehicles: Self-driving cars may have to make split-second decisions when a collision is unavoidable. Should a car prioritize the safety of its passengers, or should it swerve to avoid hitting a pedestrian, even if this means risking the lives of those inside? This dilemma is often framed as a real-world variant of the “trolley problem,” and it is a prime example of how ethical considerations are entering robotic design.

- Healthcare AI: In medicine, AI systems are increasingly being used to diagnose diseases, recommend treatments, and even assist in surgery. Their outputs can feed into ethically charged decisions, such as when to withhold treatment or how to allocate scarce resources in times of crisis (e.g., during a pandemic). Carefully designed AI could help keep such decisions focused on patient welfare rather than profit motives.

- Military Robots: Drones and autonomous weapons systems are becoming more advanced, and there is growing concern about their use in warfare. Should a robot be programmed to make life-or-death decisions in the heat of battle, or should human oversight always remain a requirement?

6. Challenges and Concerns

There are several significant challenges in implementing robots that can make ethical decisions:

- Bias in AI: AI systems are only as unbiased as the data they are trained on. If a robot is trained on biased data—such as medical data that reflects racial or gender disparities—it may make unethical decisions that perpetuate these inequalities.

- Lack of Accountability: If a robot makes an unethical decision, who is responsible? Is it the programmer, the manufacturer, or the robot itself? Establishing accountability is one of the most challenging aspects of ethical robotics.

- Ethical Relativism: Different cultures and individuals have different moral values. What might be considered ethical in one society could be viewed as unethical in another. Programming a robot to navigate these differing moral perspectives without causing harm or offense would be an incredibly difficult task.

- Loss of Human Agency: Allowing robots to make ethical decisions might result in humans relinquishing control over critical aspects of their lives. This loss of agency could undermine personal autonomy and trust in technology.
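Of the challenges listed above, bias is the one that can most readily be measured. One common starting point is to compare how often a system gives a favorable decision to each group. The sketch below computes that gap on made-up data; the function names and the sample decisions are hypothetical, and a small gap on this one metric does not by itself show that a system is fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def favorable_rate_by_group(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of favorable decisions for each group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        favorable[group] += int(approved)
    return {group: favorable[group] / totals[group] for group in totals}


def parity_gap(decisions: Iterable[Tuple[str, bool]]) -> float:
    """Difference between the highest and lowest favorable rate across groups."""
    rates = favorable_rate_by_group(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up decisions: (group label, whether treatment was recommended).
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(favorable_rate_by_group(sample))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(sample))               # 0.5
```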

7. Looking Ahead: The Future of Ethical Robotics

As AI continues to evolve, robots that make ethical decisions will become more feasible, but they will also require more rigorous ethical guidelines and oversight. We are likely to see a hybrid approach, where AI assists humans in making decisions but does not replace human judgment altogether.
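One way such a hybrid approach might be wired up is to let the system act on its own only for routine, high-confidence cases and send everything else to a person. The sketch below is an assumption about how that routing could look; the `Recommendation` fields, the `route` function, and the 0.9 threshold are illustrative and do not describe any particular deployed system.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: str
    confidence: float  # the system's own estimate, between 0 and 1
    high_stakes: bool  # flagged by policy, e.g. life-or-death decisions


def route(rec: Recommendation, threshold: float = 0.9) -> str:
    """Decide whether a recommendation may be applied without human review."""
    if rec.high_stakes or rec.confidence < threshold:
        return "human_review"
    return "auto_apply"


print(route(Recommendation("reorder supplies", 0.97, high_stakes=False)))  # auto_apply
print(route(Recommendation("reorder supplies", 0.60, high_stakes=False)))  # human_review
print(route(Recommendation("withhold treatment", 0.99, high_stakes=True)))  # human_review
```

Note that high-stakes cases go to a human regardless of confidence, so the threshold only governs routine decisions.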

Ethical robots could revolutionize industries like healthcare, law enforcement, and transportation, making decisions faster and potentially more fairly than humans. However, we must carefully consider the implications of giving robots moral responsibilities. Ethical frameworks will need to be continuously refined, and the role of human oversight will remain crucial to ensure that these machines serve humanity in a way that aligns with our values and principles.

Conclusion

While robots making ethical decisions might sound like science fiction, the potential for such a reality is becoming more tangible as technology advances. However, the road ahead is filled with ethical, philosophical, and technical challenges. Can robots truly understand human morality, or are they simply mimicking ethical behavior based on their programming? For now, the debate continues, and while AI may one day help us navigate moral dilemmas, we must proceed with caution to ensure that technology serves humanity’s best interests.