Introduction: The Age of Mind-Reading Technology

Imagine a world where robots can understand your thoughts. Mind-reading machines have long been a staple of science fiction, from Star Trek to Black Mirror, and the convergence of neuroscience, robotics, and artificial intelligence (AI) is making this once-fantastical idea more plausible. Mind-reading robots could transform industries, healthcare, and human-robot interaction. But just how close are we to achieving this?

The Science of Mind-Reading: Where Neuroscience Meets Technology

Understanding Brain Signals

Before delving into robots that read minds, we need to understand the signals the brain produces. Neurons communicate through electrical impulses and chemical messengers, and the resulting activity can be observed with techniques such as Electroencephalography (EEG) and Functional Magnetic Resonance Imaging (fMRI). Brain-Computer Interfaces (BCIs) build on such recordings to turn brain activity into commands. None of these methods offers direct “mind-reading,” but they allow scientists to observe brain activity associated with specific thoughts, emotions, and actions.

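- EEG records electrical activity through electrodes placed on the scalp, revealing brainwaves: rhythmic oscillations grouped into frequency bands such as alpha (roughly 8–12 Hz) and beta (roughly 13–30 Hz).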

- fMRI detects changes in blood flow and oxygenation that accompany neural activity, allowing researchers to pinpoint areas of the brain activated during particular mental tasks.

- BCI translates electrical signals from the brain into actionable data for controlling external devices, such as robotic arms.

Though these technologies have made strides, they are still far from the seamless mind-reading robots of popular culture.
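To make “brainwaves” a little more concrete, here is a minimal sketch of how one might measure how much of a signal’s power falls in the alpha band versus the beta band. It assumes a synthetic signal rather than a real EEG recording, a 256 Hz sampling rate, and the approximate band edges given above; the function name `band_power` is hypothetical, and a plain discrete Fourier transform is used only to keep the example dependency-free.

```python
import math

def band_power(signal, fs, low, high):
    """Sum of squared DFT magnitudes for frequencies in [low, high] Hz.

    A naive O(n^2) discrete Fourier transform keeps this dependency-free;
    real EEG pipelines would use an FFT and a windowed estimate (e.g. Welch's method).
    """
    n = len(signal)
    total = 0.0
    # A real-valued signal only needs the first half of the spectrum.
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if low <= freq <= high:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            total += re * re + im * im
    return total

# Synthetic 2-second "recording": a strong 10 Hz (alpha) rhythm plus a weaker 20 Hz (beta) one.
fs = 256  # samples per second (assumed)
signal = [
    2.0 * math.sin(2 * math.pi * 10 * t / fs) + 0.5 * math.sin(2 * math.pi * 20 * t / fs)
    for t in range(2 * fs)
]

alpha = band_power(signal, fs, 8, 12)
beta = band_power(signal, fs, 13, 30)
print(f"alpha/beta power ratio: {alpha / beta:.1f}")  # alpha/beta power ratio: 16.0
```

Because power scales with the square of amplitude, a rhythm four times stronger shows up as sixteen times more power, which is why band-power ratios are a common way to summarize EEG activity.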

Decoding Thoughts: The Role of Machine Learning

Machine learning (ML) is central to decoding brain activity. ML algorithms analyze large datasets of brain signals to identify patterns linked to specific thoughts or intentions. In principle, with enough data and sophisticated algorithms, a robot could “interpret” these signals, enabling it to predict or even respond to a person’s intentions.
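One of the simplest ways to “identify patterns” in brain data is a nearest-centroid classifier: average the feature vectors recorded while a person performs each mental task, then label a new recording by whichever average it lies closest to. The sketch below assumes two made-up features per recording (for example, band powers from two hypothetical electrodes) and hypothetical task labels `left_hand` and `right_hand`; real decoders use far more data and usually more sophisticated models.

```python
import math

def train_centroids(examples):
    """Average the feature vectors for each label (the 'training' step)."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def predict(centroids, features):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Hypothetical training data: (feature vector, imagined task) pairs.
training = [
    ([0.9, 0.3], "left_hand"),
    ([1.1, 0.4], "left_hand"),
    ([0.3, 1.0], "right_hand"),
    ([0.4, 1.2], "right_hand"),
]

centroids = train_centroids(training)
print(predict(centroids, [1.0, 0.2]))  # left_hand
```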

Example: Researchers at the University of California, Berkeley, demonstrated that by combining fMRI scans with machine learning, they could identify which image, out of a large set of candidates, a subject was viewing, based solely on their brain activity. While the technology is still in its infancy, these breakthroughs are laying the groundwork for future advancements.
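A simplified way to frame this kind of experiment is as “identification”: a model predicts the brain response each candidate image should evoke, and the decoder picks the candidate whose predicted response correlates best with the measured one. The sketch below assumes those predicted responses already exist (here, short made-up vectors standing in for voxel activity) and shows only the matching step; it is an illustration of the idea, not the Berkeley team’s actual model.

```python
import statistics

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

def identify(measured, predicted_by_image):
    """Pick the candidate image whose predicted response best matches the measurement."""
    return max(predicted_by_image, key=lambda img: pearson(measured, predicted_by_image[img]))

# Hypothetical model-predicted voxel responses for three candidate images.
predicted = {
    "beach": [0.9, 0.1, 0.4, 0.8, 0.2],
    "face": [0.2, 0.9, 0.7, 0.1, 0.5],
    "city": [0.5, 0.5, 0.1, 0.3, 0.9],
}
measured = [0.8, 0.2, 0.5, 0.7, 0.1]  # noisy measurement while viewing one image
print(identify(measured, predicted))  # beach
```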

Robotics and AI: The Convergence of Brain and Machine

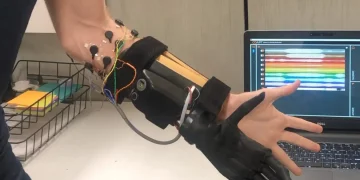

Brain-Computer Interfaces (BCI): The Bridge to Mind-Control

The development of Brain-Computer Interfaces (BCI) represents one of the most significant milestones in human-robot interaction. BCIs allow direct communication between the brain and external devices, bypassing the need for conventional input methods like keyboards or touchscreens.

While BCIs have already allowed some individuals to control prosthetic limbs or type on a screen through thought alone, they are still far from being able to interpret complex mental states or emotions. However, continuous advancements are bringing us closer to that goal.

For example, Elon Musk’s Neuralink is developing a high-bandwidth brain-machine interface that could potentially allow direct communication between the human brain and robots. Neuralink’s initial focus is on treating neurological conditions, but its long-term plans include enhancing human capabilities and enabling mind-machine symbiosis.
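BCIs that let users move a cursor or prosthetic limb generally rely on a decoder that maps recorded neural activity to movement. The sketch below shows the simplest version of that idea: a fixed linear map from the firing rates of a few recorded channels to a 2-D velocity, integrated over time into a position. The weights, baseline rates, firing rates, and 50 ms update step are all invented for illustration; practical systems fit the decoder to calibration data and often use more elaborate estimators such as a Kalman filter.

```python
def decode_velocity(rates, weights, baseline):
    """Linear decoder: velocity = W @ (rates - baseline), giving a 2-D (vx, vy)."""
    centered = [r - b for r, b in zip(rates, baseline)]
    return tuple(sum(w * c for w, c in zip(row, centered)) for row in weights)

# Hypothetical decoder for 3 recorded channels -> (vx, vy), in cm/s per spike/s.
weights = [
    [0.02, -0.01, 0.00],  # contribution of each channel to vx
    [0.00, 0.01, 0.03],   # contribution of each channel to vy
]
baseline = [10.0, 10.0, 10.0]  # assumed resting firing rates (spikes/s)

position = [0.0, 0.0]  # cursor or arm endpoint position in cm
dt = 0.05  # 50 ms update step (assumed)

# Made-up firing rates over four time steps while the user "intends" to move.
for rates in ([30.0, 10.0, 20.0], [30.0, 10.0, 20.0], [10.0, 10.0, 10.0], [10.0, 30.0, 10.0]):
    vx, vy = decode_velocity(rates, weights, baseline)
    position[0] += vx * dt
    position[1] += vy * dt
    print(f"v=({vx:+.2f}, {vy:+.2f}) cm/s -> position=({position[0]:.3f}, {position[1]:.3f})")
```

Subtracting a baseline means that resting activity (the third time step) produces no motion, so the arm or cursor only moves when the recorded activity departs from rest.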

AI’s Role in the Future of Mind-Reading Robots

As AI continues to improve, it may become increasingly capable of interpreting complex brain activity and translating it into actionable data. Deep learning, a subset of machine learning, could help robots better understand and predict human behavior, moving us closer to robots that can “read” minds in the everyday sense of the phrase.

Consider OpenAI’s GPT models. They cannot read minds, but their ability to understand and generate human language with uncanny fluency suggests that, with the right input data, AI could one day respond to cognitive states in a meaningful way.

With further progress, AI may be able to detect subtle patterns in brain activity that correspond to specific thoughts or emotions. In a future scenario, for example, a robot could discern whether someone is thinking about a specific object, feeling anxious, or anticipating a question before they even speak.

Real-World Applications: How Mind-Reading Robots Could Transform Industries

Healthcare: From Diagnosis to Treatment

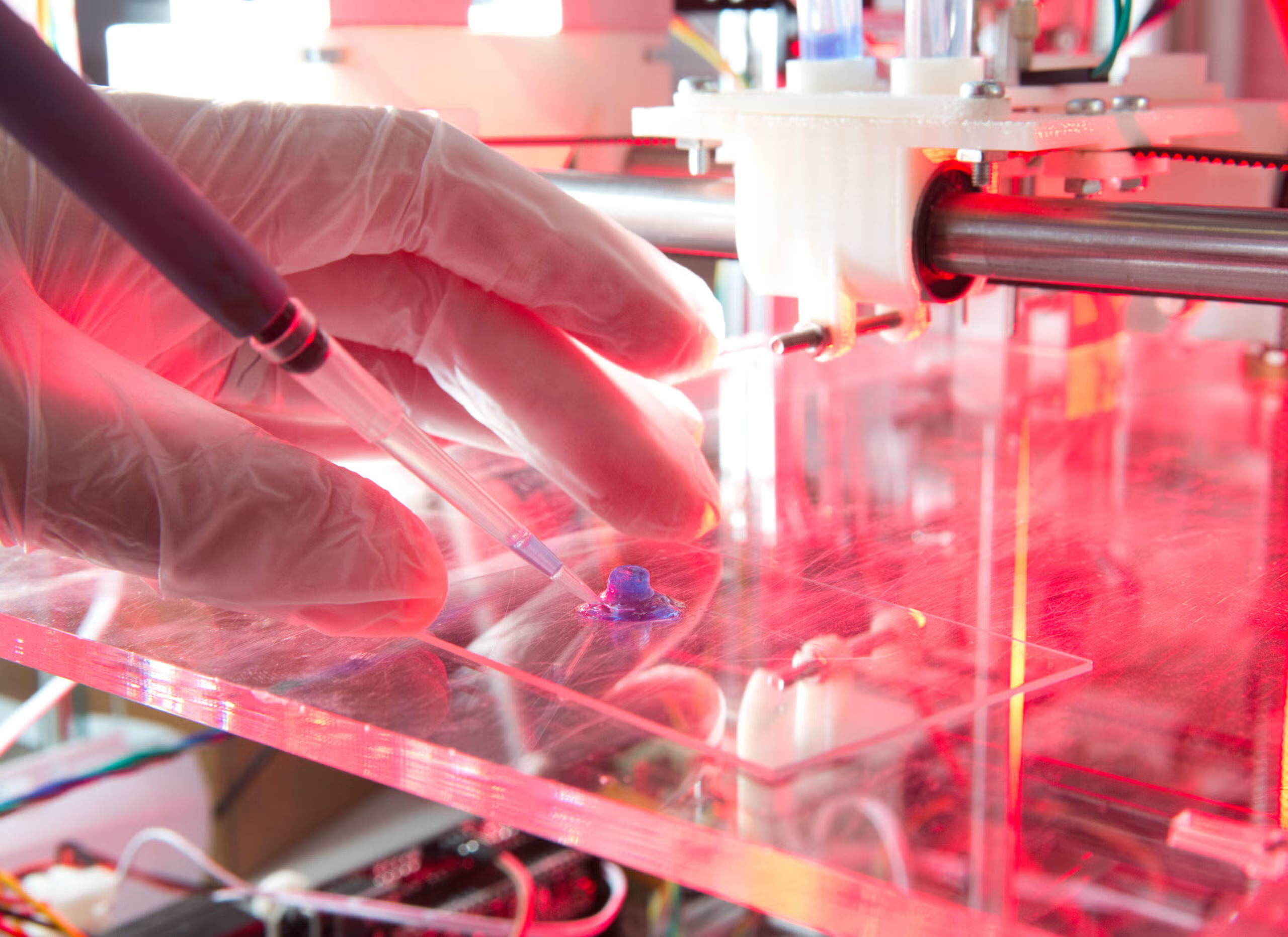

In healthcare, mind-reading robots could revolutionize how doctors diagnose and treat patients. Neuroprosthetics, which use BCIs to help individuals with paralysis regain control over their limbs, are just the beginning. Future mind-reading robots could analyze brain activity in real time to detect early signs of conditions such as Alzheimer’s disease and Parkinson’s disease, or even mental health disorders such as depression and anxiety.

Additionally, mind-reading technology could assist in neurosurgery, where robots could work in concert with doctors to execute highly precise procedures by interpreting the brain’s responses in real time.

Education: Personalized Learning Systems

Mind-reading robots could also transform the education sector. By analyzing students’ brain waves, AI-powered robots could determine whether a student is struggling to grasp a concept, experiencing stress, or losing focus. Personalized learning systems could then adapt in real time, offering tailored support based on the student’s cognitive and emotional state.

Moreover, these robots could help accommodate differences in how students learn, providing an individualized educational experience that maximizes each student’s potential.
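As a rough illustration of how a system might act on signs of lost focus, one EEG measure proposed in the 1990s in research on operator engagement with automated systems is an “engagement index” computed from band powers as beta / (alpha + theta). The sketch below turns that index into a tutoring decision. The threshold of 0.3, the function names, and the two actions are hypothetical choices, and the index is at best a noisy proxy for attention, not a direct readout of understanding.

```python
def engagement_index(theta, alpha, beta):
    """Engagement index beta / (alpha + theta), computed from EEG band powers."""
    return beta / (alpha + theta)

def next_step(theta, alpha, beta, threshold=0.3):
    """Hypothetical tutoring policy driven by the engagement index."""
    index = engagement_index(theta, alpha, beta)
    if index < threshold:
        return "re-engage: switch to an interactive exercise"
    return "continue: present the next concept"

# Made-up band powers (arbitrary units) from two moments in a lesson.
print(next_step(theta=6.0, alpha=8.0, beta=3.0))  # index about 0.21 -> re-engage
print(next_step(theta=4.0, alpha=5.0, beta=4.5))  # index 0.5 -> continue
```

In practice, such a system would smooth the index over many seconds and calibrate the threshold per student, since raw band powers vary widely between people and recording setups.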

Entertainment and Gaming: A New Era of Immersion

In entertainment, mind-reading robots could enhance gaming experiences. Imagine a video game that reacts to your thoughts, with a game world that adapts in real time to the player’s mental state. Virtual reality (VR) and augmented reality (AR) could become more immersive by interpreting users’ cognitive and emotional states, leading to more dynamic and personalized interactions.

Hollywood may also benefit from mind-reading technology. Filmmakers could use brain scans to gauge audience reactions to scenes, helping them strengthen the emotional resonance of movies.

Ethical Considerations: The Dark Side of Mind-Reading Robots

Privacy Concerns

As robots become more attuned to our thoughts, questions surrounding privacy become increasingly pertinent. How much access should robots have to our inner cognitive world? In a future where mind-reading is possible, the potential for misuse is vast. Governments, corporations, and malicious actors could exploit mind-reading technology for surveillance, manipulation, or even control.

Imagine companies tracking consumers’ thoughts to predict their buying behavior or political views. Given how rapidly data collection techniques have evolved, this scenario is not as far-fetched as it seems.

Consent and Autonomy

Ethical concerns surrounding consent also play a significant role. Would individuals have control over which thoughts are shared with robots? Could robots access subconscious thoughts or memories without explicit permission? These questions will likely dominate discussions as mind-reading technology progresses.

Social and Psychological Impact

Another concern is the psychological and social impact of robots knowing our thoughts. Human relationships, trust, and social interactions are already complicated—adding robots into the mix could exacerbate these issues. For example, would people feel comfortable around robots that could predict their every move or intention?

The Road Ahead: Challenges and Future Outlook

Technical Hurdles

Despite significant advancements, building a robot capable of fully understanding and responding to human thoughts remains a monumental challenge. The human brain is staggeringly complex, with roughly 86 billion neurons connected by trillions of synapses. Decoding the intricacies of thought, emotion, and consciousness may require new technologies, algorithms, and computational power that have yet to be developed.

Moreover, understanding the “why” behind brain activity, such as why a person is having a certain thought or feeling a particular emotion, adds another layer of complexity.

Ethical Frameworks and Regulation

As mind-reading technology becomes more feasible, robust ethical frameworks and regulations will be essential. International bodies, governments, and tech companies must collaborate to ensure that privacy, consent, and human autonomy are protected. Furthermore, addressing the psychological and social impacts of such technology will be critical to its responsible integration into society.

Conclusion: Mind-Reading Robots—A Reality or Fantasy?

The dream of building robots that can read minds is not as distant as it once seemed. With rapid advancements in neuroscience, AI, and robotics, the possibility is increasingly within reach. However, many technical, ethical, and societal challenges remain before we can truly achieve mind-reading machines.

In the coming decades, we are likely to see significant progress toward robots that can interpret brain signals, understand emotions, and respond to human thoughts in meaningful ways. Whether this leads to a utopian future of enhanced human-robot cooperation or raises new concerns about privacy and autonomy remains to be seen.

One thing is clear: the future of mind-reading robots will challenge the very nature of what it means to be human—and may redefine the boundaries between man and machine.