Technology is evolving at an unprecedented pace. Robots, once relegated to the realm of science fiction, are now an integral part of industries from manufacturing to healthcare, and increasingly of our daily lives. Their growing presence raises a pressing question: are we ready to trust robots with our safety? The question goes beyond the capabilities of artificial intelligence and robotics. It reflects our evolving relationship with machines and our willingness to hand over control of tasks that were once performed by human hands.

The Rise of Robotics and Automation

Robots have come a long way from the clunky, industrial machines designed for simple, repetitive tasks. Today, robotics encompasses a wide range of applications—autonomous vehicles, surgical robots, drones for delivery, and even personal assistants. Each of these advancements represents a leap toward robots assuming more complex roles in society. However, this evolution also brings new challenges, particularly when it comes to trusting these robots with matters of life and death.

One of the most prominent examples of robots taking on safety-critical tasks is the autonomous vehicle. Self-driving cars rely on sensors and complex algorithms to make decisions in real time, and some of those decisions can mean life or death. The idea of a machine deciding when to brake or swerve is unsettling for many people. Despite the impressive technology behind them, autonomous vehicles have been involved in crashes, some of them fatal, raising the question of whether we can ever truly trust these systems.

The Trust Dilemma: Humans vs. Machines

At the core of the question of trust lies a fundamental human dilemma: can we trust something that doesn't think, feel, or understand the way we do? Unlike humans, robots have no instincts, emotions, or sense of moral judgment. They follow algorithms written by humans, and those algorithms can contain errors, run on hardware that malfunctions, or fail to anticipate every possible scenario.

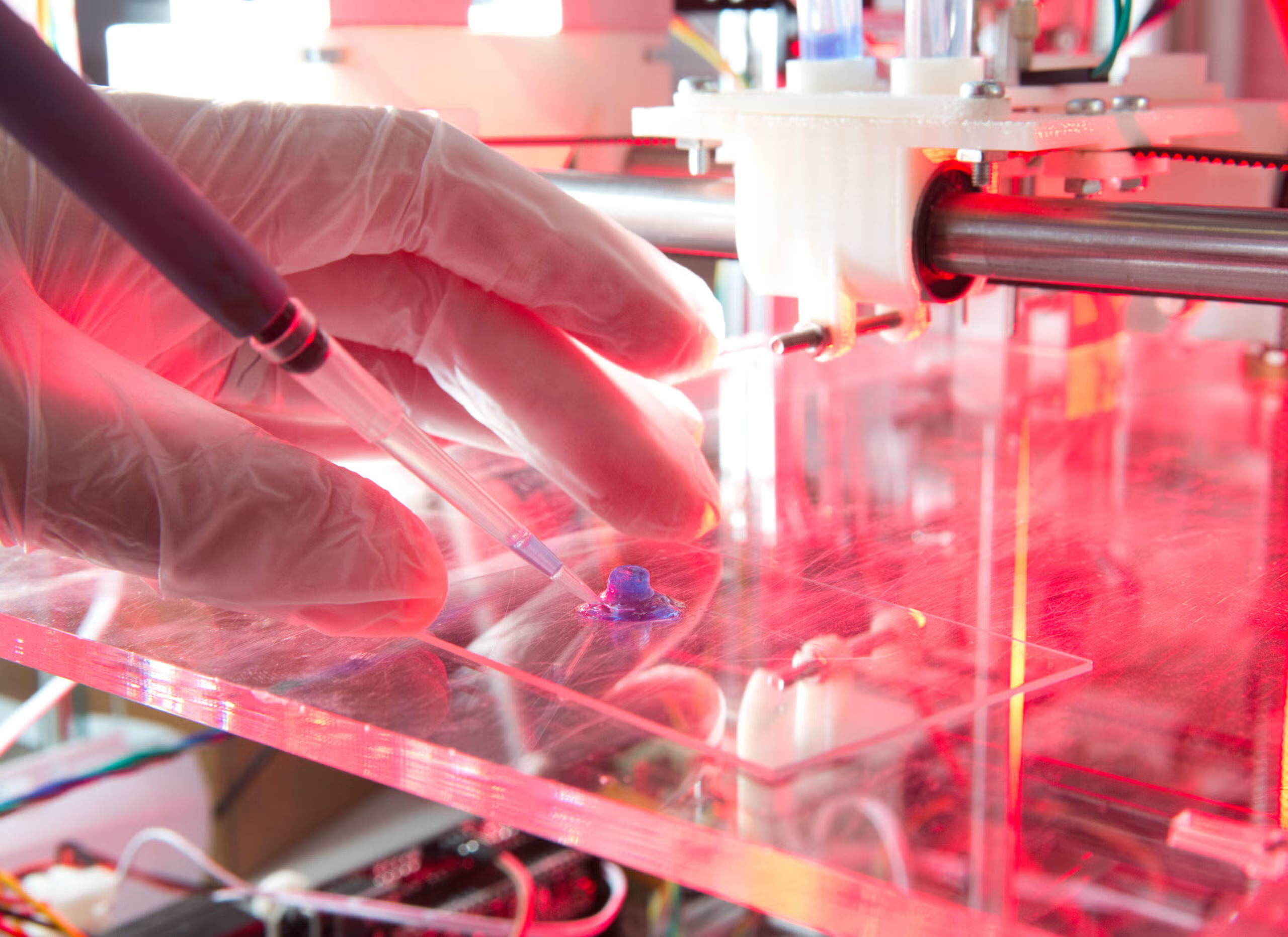

The trust dilemma becomes even more pronounced in life-critical sectors such as surgery. In many modern hospitals, robotic systems assist surgeons during operations, helping them make precise incisions and perform delicate tasks that demand more dexterity than an unaided human hand can offer. Systems such as the da Vinci Surgical System are controlled by a surgeon at a console, and the robot translates the surgeon's hand movements into smaller, steadier instrument motions. Even so, when the system fails, whether through a software glitch or an unforeseen complication, the consequences can be catastrophic. The da Vinci has been used in millions of procedures, but how much should we trust it when we are the ones on the operating table?

The Psychological Aspect: Trusting Machines in Critical Situations

Psychologists have studied the concept of trust in human-robot interaction (HRI), revealing several factors that influence how we perceive and interact with robots. One key element is familiarity. We tend to trust what we know, and since robots are still relatively new to most of us, there’s an inherent wariness towards them, especially in situations that could endanger our lives. As robots become more integrated into daily life, we may see a shift in this dynamic, as people grow more accustomed to their presence and capabilities.

Another aspect of trust is transparency. Humans are more likely to trust a system if they understand how it works. This is one reason some autonomous vehicle developers publish safety reports and try to explain to the public how their systems make decisions. If we can understand how a robot reaches its decisions, we may feel more confident in its ability to keep us safe. Transparency has limits, however: the algorithms driving these machines, especially those built on machine learning, can be difficult to interpret even for experts.

Robots and Safety: The Pros and Cons

When it comes to safety, robots undoubtedly offer some significant advantages. Consider the following benefits:

- Precision and Accuracy: In many tasks, robots can work with a level of precision that humans simply cannot match. This is particularly crucial in fields like surgery, where even the smallest mistake can have dire consequences.

- Reduced Human Error: Human error is a leading cause of accidents, whether on the road or in the operating room. Robots, by contrast, are designed to follow protocols and can operate tirelessly without fatigue, reducing the likelihood of mistakes.

- Risk Reduction: Robots can be deployed in hazardous environments, such as nuclear power plants, space exploration, or disaster zones, where human lives would be at risk. In these cases, robots can take on dangerous tasks, preserving human safety.

- Data Processing: Robots can analyze vast amounts of sensor data far more quickly than humans, allowing them to react to changing conditions in real time faster than any person could.

However, there are significant downsides as well:

- System Failures and Glitches: As advanced as robots have become, they are still vulnerable to malfunctions. A software glitch or sensor failure can result in catastrophic errors, especially in safety-critical applications.

- Lack of Ethical Judgment: Robots make decisions based on data, but they lack the nuanced ethical judgment that a human might bring to a complex situation. For instance, an autonomous car may have to choose between two equally dangerous outcomes. Who decides which outcome is morally preferable?

- Vulnerability to Cyber Attacks: As robots become more connected, they also become more vulnerable to hacking. A robot’s failure could be as much the result of malicious intent as a mechanical issue.

- Job Displacement and Dependence: Trusting robots with our safety could also lead to over-reliance on technology. As robots take on more roles traditionally filled by humans, there’s the potential for job displacement, and even more concerning, a loss of critical human skills in areas like surgery, firefighting, and law enforcement.

Case Studies: Real-World Applications

Several high-profile cases illustrate the tension between robotic advancement and human safety.


- Autonomous Vehicles: In 2018, an Uber self-driving test car struck and killed a pedestrian in Tempe, Arizona. The car's sensors detected the pedestrian several seconds before impact, but the software repeatedly misclassified her and did not brake in time, and the backup safety driver was not watching the road. The incident raised questions about the readiness of autonomous vehicles for mainstream adoption. While the technology has improved, accidents continue to occur, sparking debate about whether human oversight should remain part of the driving process.

- Surgical Robots: The da Vinci Surgical System has transformed minimally invasive surgery, allowing surgeons to operate through small incisions with greater precision. Despite its success, there have been reports of malfunctions, such as problems with the robotic arms or software glitches, raising concerns about the reliability of such machines in critical surgeries.

- Drones in Rescue Operations: Drones are increasingly used in rescue operations, from surveying disaster zones to delivering medical supplies. Their ability to navigate areas unsafe for humans is invaluable, yet their reliance on GPS signals and data processing means that any disruption or error could result in failure, endangering lives.

A Vision for the Future: Striking a Balance

While robots undoubtedly have the potential to improve safety, we are still far from a world where we can fully rely on them. Rather than trusting robots entirely, a more balanced approach is necessary. Human oversight remains crucial in many situations, especially when ethical decisions or complex judgment calls are required. It is not enough to have a robot that can make data-driven decisions; we also need a system that accounts for human values, ethics, and emotions.

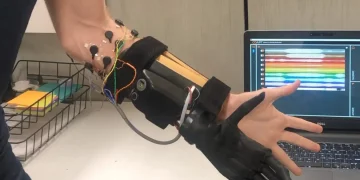

One promising direction is the use of collaborative robots, or cobots. In this framework, robots work alongside humans, enhancing their capabilities rather than replacing them. For example, a surgical robot might help a surgeon make precise cuts while the surgeon remains in control and makes the critical decisions during the procedure. This collaborative approach can offer the best of both worlds: the precision and efficiency of robots combined with the judgment and empathy of humans.

The Road Ahead: Building Trust in Robotic Systems

To ensure that we are truly ready to trust robots with our safety, several steps need to be taken:

- Improved Transparency: Making robot systems more transparent and understandable will help the public feel more comfortable with their use in critical situations.

- Better Regulations and Standards: Governments and industry leaders must collaborate to develop strict standards for safety, ethics, and accountability in robotic systems.

- Enhanced Safety Protocols: Continuous improvements in robotic technology—particularly in fail-safes and backup systems—will help mitigate the risks associated with robotic failures.

- Human-Centered Design: Focusing on human needs and capabilities will ensure that robots complement and support human decision-making, rather than replacing it altogether.

Conclusion: Trust, But Verify

The future of robots and safety is both exciting and fraught with challenges. While robots are making impressive strides in improving safety across industries, we must approach their integration into safety-critical domains with caution. Trusting robots with our safety is not a question of if, but when—and under what conditions. By combining the strengths of both human and machine intelligence, we can create a future where robots enhance, rather than endanger, our safety.