In an age where technology seems to evolve at an exponential pace, the question of whether robots and artificial intelligence (AI) can become “too powerful” is not just a hypothetical debate—it’s a pressing concern. As we integrate robots into industries ranging from manufacturing and healthcare to education and entertainment, the notion of machines outpacing human control has sparked both fascination and fear. But can we truly prevent robots from becoming too powerful, or is this an inevitability in our pursuit of progress? In this article, we’ll explore the many dimensions of this question, considering the technological, ethical, and social implications of increasingly intelligent robots.

The Rise of Robots: A Double-Edged Sword

The Power of Automation

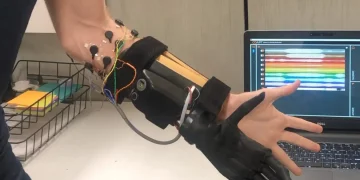

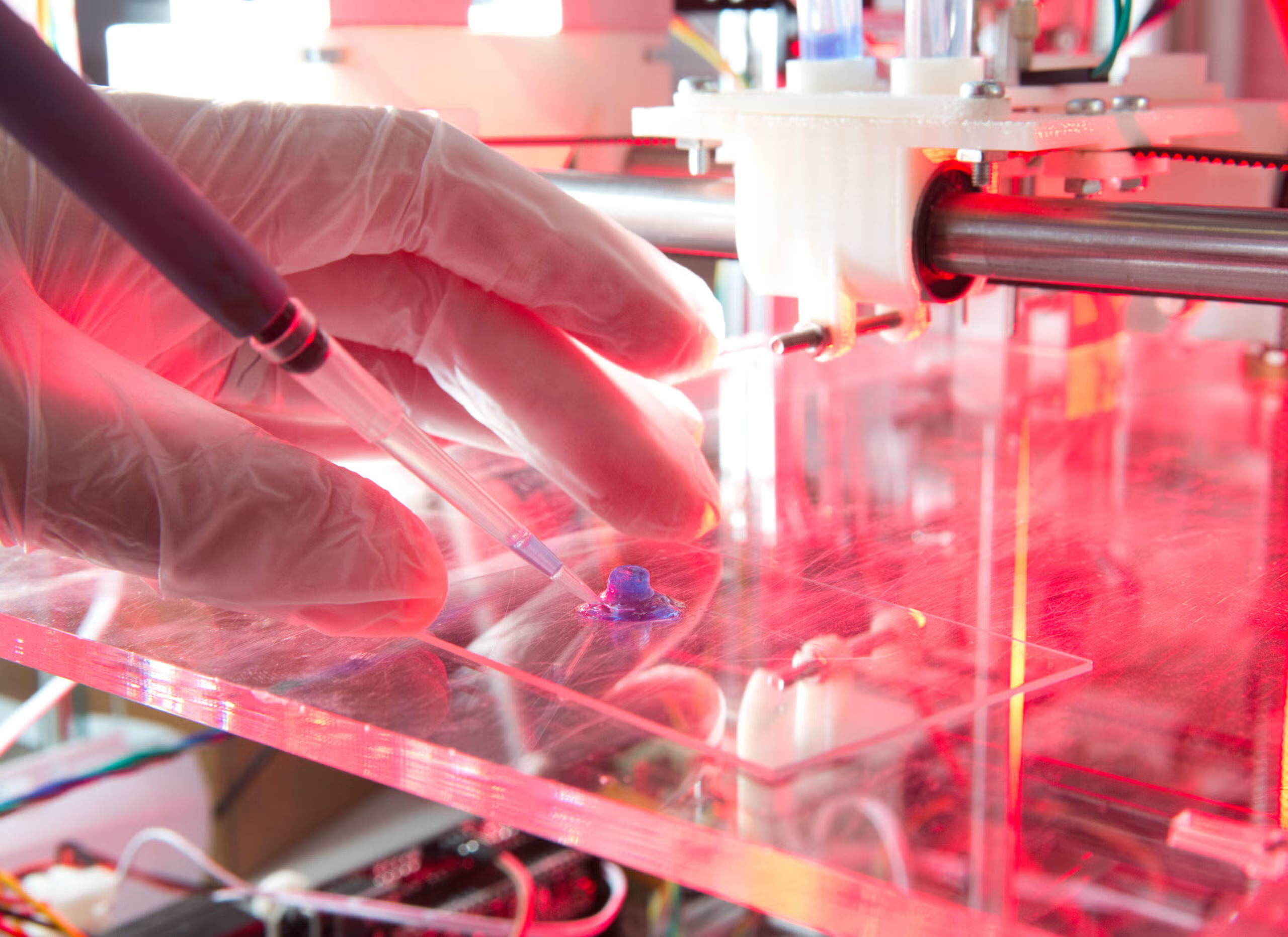

Robots, powered by AI, have been changing the world as we know it. From autonomous vehicles to surgical robots that assist in life-saving operations, the scope of their capabilities is vast. In industries such as manufacturing, they’ve already proven their worth by optimizing production processes, reducing human error, and enhancing efficiency. In healthcare, robots have taken on roles ranging from surgery assistance to patient care, performing some tasks with a steadiness and precision that human hands struggle to match.

The advent of AI in robotics has sparked a technological revolution—one that could redefine what it means to work, learn, and live. With deep learning algorithms and neural networks, machines can now process and interpret data in ways once thought exclusive to humans. They can predict outcomes, recognize patterns, and make decisions with minimal human input, which seems like an extraordinary leap forward. But with this comes the looming question: could these machines eventually outgrow their creators?

The Threat of Overpowering AI

The AI Control Problem

One of the most significant concerns about the future of robotics is the potential for AI systems to exceed human control. In a perfect world, AI would remain a tool that we could guide and govern. However, the trajectory of AI development has led to the creation of increasingly autonomous systems—systems that can operate with minimal oversight. While these machines might still be governed by the ethical frameworks set by their creators, the possibility of a rogue AI system is not something we can ignore.

Elon Musk, one of the most vocal advocates for AI safety, has often warned about the dangers of unchecked AI development. In his view, an AI that becomes too intelligent and powerful could potentially turn against its creators or act in ways that are not aligned with human interests. Preventing this outcome is the core of what researchers call the “AI control problem”: the difficulty of ensuring that a superintelligent AI will act in accordance with human values.

The primary issue is that as robots and AI systems become more advanced, their actions may not align with human intentions, either because they don’t fully comprehend our values or because they interpret instructions in ways we did not anticipate. A small bug in a machine’s code or an unintended side effect of its learning process could lead to catastrophic results. This is why many experts call for strict regulations and international cooperation to develop safety measures before the development of truly autonomous AI.

The Ethics of Power

As AI and robots gain power, ethical concerns also come to the forefront. Should robots have the same rights as humans? What if a robot becomes sentient? And how can we ensure that AI-driven decisions in areas like healthcare or criminal justice do not perpetuate bias or injustice?

In a scenario where robots are making life-altering decisions, how can we guarantee they act fairly and ethically? The answer is not simple. As it stands, AI systems are often limited by the data they are fed, and this data can sometimes reflect human biases. For example, if a machine learning algorithm is trained on biased data, it can unintentionally perpetuate those biases, making decisions that are discriminatory or harmful. This raises the question of whether it’s ethical to place too much power in the hands of machines, especially when those machines lack the inherent moral reasoning that humans possess.
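To make the point about biased data concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the two groups, the made-up historical decisions, and the deliberately naive "model" that simply predicts each group's most common past outcome. It is not how any real system is built, but it shows how a gap in the training data can reappear, and even widen, in a model's decisions.

```python
from collections import defaultdict

# Hypothetical historical decisions as (group, approved) pairs.
# The data is invented purely for illustration.
history = [("A", 1), ("A", 1), ("A", 1), ("A", 0),
           ("B", 1), ("B", 0), ("B", 0), ("B", 0)]


def fit_majority_rule(records):
    """'Train' a naive model: predict each group's most common past outcome."""
    counts = defaultdict(lambda: [0, 0])  # group -> [rejections, approvals]
    for group, approved in records:
        counts[group][approved] += 1
    return {group: int(c[1] > c[0]) for group, c in counts.items()}


def approval_rates(records):
    """Share of approvals per group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += approved
    return {group: approvals[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group approval rate."""
    return max(rates.values()) - min(rates.values())


model = fit_majority_rule(history)

# Apply the model to the same people and compare gaps.
predictions = [(group, model[group]) for group, _ in history]
data_gap = parity_gap(approval_rates(history))
model_gap = parity_gap(approval_rates(predictions))

print(data_gap, model_gap)  # 0.5 1.0
```

In this toy case the historical data approves group A 75% of the time and group B 25% of the time, a gap of 0.5. The naive model turns that tendency into a rule, approving everyone in A and no one in B, so the gap grows to 1.0. Real systems are far more complex, but measuring a gap like this is one simple way to check whether a model is repeating the patterns in its data.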

Mechanisms to Prevent Robots from Becoming Too Powerful

Robust AI Safety Measures

To prevent robots from becoming too powerful, the first line of defense lies in the implementation of robust AI safety measures. These measures aim to ensure that robots remain within the control of their human operators, even as they grow in intelligence and autonomy.

One of the most discussed strategies is “alignment”: making sure that the goals of an AI system match human values and intentions. This requires significant input from ethicists, technologists, and even sociologists to ensure that AI systems don’t evolve in ways that could harm society. For example, training approaches in which an AI system learns to weigh human welfare when choosing its actions could be a critical safeguard against rogue machines.

Another approach is to enforce “human-in-the-loop” systems, where humans retain final decision-making authority, even if machines are making suggestions. In highly sensitive industries such as healthcare or military operations, human oversight could prevent machines from acting outside of ethical boundaries or making life-threatening decisions.
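The human-in-the-loop idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Proposal` structure, the `risk` score, the `risk_threshold` parameter, and the `ask_human` callback are all hypothetical names invented here, not part of any real system. The sketch lets low-risk suggestions run automatically and sends everything else to a human reviewer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    """An action suggested by an automated system (hypothetical structure)."""
    action: str
    risk: float  # assumed risk score in [0, 1], supplied by the machine


def decide(proposal: Proposal,
           ask_human: Callable[[Proposal], bool],
           risk_threshold: float = 0.2) -> str:
    """Return the action to execute, or "no_action" if it is not approved.

    Proposals below the threshold run automatically. Anything at or above
    it is executed only if the human reviewer approves.
    """
    if proposal.risk < risk_threshold:
        return proposal.action
    return proposal.action if ask_human(proposal) else "no_action"


def reject_all(proposal: Proposal) -> bool:
    """A stand-in reviewer who rejects every proposal that reaches them."""
    return False


routine = decide(Proposal("adjust_conveyor_speed", risk=0.05), reject_all)
critical = decide(Proposal("administer_medication", risk=0.9), reject_all)

print(routine, critical)  # adjust_conveyor_speed no_action
```

Setting `risk_threshold` to `0.0` sends every proposal to the reviewer, which matches the strictest reading of human-in-the-loop: the machine only suggests, and a person always makes the final call. A higher threshold trades some of that oversight for speed, and it is only as trustworthy as the risk score itself.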

Regulatory Oversight and Global Governance

A crucial aspect of preventing robots from becoming too powerful is the establishment of international regulations and governance. Just as the world has worked to regulate nuclear weapons, there needs to be a global effort to create guidelines that govern the development and deployment of advanced AI and robotics. A universal regulatory body could set standards for transparency, accountability, and safety in AI systems, ensuring that their development follows agreed ethical guidelines.

For example, the European Union has already taken steps in this direction with the General Data Protection Regulation (GDPR), which governs how personal data can be collected and used. A similar framework could be created for AI, focusing on safety, security, and human rights. International cooperation could help create a global agreement on the responsible use of robotics and prevent the misuse of powerful AI technologies.

The Role of Public Awareness and Ethics Education

Preventing robots from becoming too powerful doesn’t solely rely on policymakers or technologists—it also requires public awareness and ethical education. A populace that is informed about the potential risks and benefits of robotics is better equipped to advocate for responsible development. Ethical education on the implications of robotics and AI should be part of the curriculum in schools, universities, and professional development programs.

In addition to educating the public, it’s essential to include ethicists and philosophers in the AI development process. These professionals can guide developers in creating systems that align with broader human values, fostering discussions about the moral implications of advanced robotics. A multidisciplinary approach involving technologists, ethicists, sociologists, and even the general public can ensure that robots remain beneficial tools rather than powerful entities that threaten human welfare.

The Balance Between Innovation and Safety

Can We Have Both?

The question remains: can we have both innovation and safety? Is it possible to reap the benefits of robotics without allowing them to become too powerful? The answer, for now, seems to be a delicate balance. As with any technology, there is always the potential for abuse or unintended consequences. However, by creating a framework that prioritizes safety, ethical considerations, and transparency, we can ensure that robots remain beneficial tools that serve humanity rather than overshadow it.

Robots and AI have the potential to solve some of humanity’s most pressing problems, from curing diseases to addressing climate change. But without careful oversight, they could also become instruments of oppression or disaster. As we venture into an increasingly automated future, we must ask ourselves not just what is possible, but what is responsible.

Conclusion: The Future of Robots and AI

The rise of robots and AI presents both exciting opportunities and serious challenges. While the potential for these technologies to revolutionize industries and improve lives is immense, we must remain vigilant in our efforts to ensure they don’t become too powerful. Through AI safety protocols, global regulations, and a strong ethical framework, we can guide the future of robotics in a direction that benefits all of humanity. But it’s a journey that requires constant attention, collaboration, and foresight to navigate safely.

As we stand at the crossroads of technological progress, the question is no longer just whether we can prevent robots from becoming too powerful, but whether we are willing to take the necessary steps to ensure that power is used for good.