In a world where robots and artificial intelligence (AI) are becoming an integral part of our daily lives, the question of ethics has surfaced as one of the most pressing concerns. From self-driving cars to medical robots, these machines are expected to make decisions, often complex ones, that could have profound implications for human lives. But what happens when these robots are tasked with making ethical decisions?

This article dives deep into the nuances of ethical decision-making by robots, exploring what it means for machines to “choose” between right and wrong, the challenges involved, and the potential consequences for society.

The Intersection of Ethics and Robotics

Ethics, traditionally a domain of human philosophy, deals with questions of right and wrong, justice, and morality. However, as machines become more autonomous and capable of complex tasks, the ethical dimension of the decisions they make has come into sharp focus.

Take the example of a self-driving car facing a sudden emergency: it must decide whether to hit a pedestrian or to swerve into a wall, potentially injuring the passenger inside. In this scenario, the robot must weigh one person's safety against another's. What ethical principles should guide its decision? Utilitarianism? Deontological ethics? Or an entirely new framework?

As machines increasingly take over decision-making roles, ethical considerations evolve from abstract theory to practical application, raising questions like:

- Who is responsible when a robot makes an unethical choice?

- How do we program ethics into machines?

- Should robots be allowed to make life-altering decisions?

Theories of Ethics and Their Application in Robotics

When robots are designed to make decisions, engineers and ethicists often rely on well-established ethical theories. These theories attempt to answer the age-old questions about morality and decision-making. Here’s how some of them can be applied to robots:

1. Utilitarianism: The Greatest Good for the Greatest Number

Utilitarianism, famously championed by philosophers like John Stuart Mill, advocates for actions that maximize overall happiness or minimize suffering. In robotic terms, this could mean designing AI to always choose the option that benefits the most people.

In the case of self-driving cars, for instance, a utilitarian approach might dictate that the car should swerve to avoid a group of pedestrians, even if it means risking the life of the single passenger inside. The calculation is simple: the greater good is saving more lives.
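
To make the calculation concrete, here is a minimal sketch of a purely utilitarian chooser. It assumes each option comes with a single estimate of how many people it would harm; the `Option` type, its fields, and the numbers are hypothetical, and no real vehicle is implied to work this way.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    expected_harmed: float  # hypothetical estimate of people harmed by this option


def utilitarian_choice(options: list[Option]) -> Option:
    # "Greatest good" reduced to one number: pick the option that harms the fewest.
    return min(options, key=lambda o: o.expected_harmed)


options = [
    Option("stay_course", expected_harmed=3.0),  # the group of pedestrians
    Option("swerve", expected_harmed=1.0),       # the single passenger
]
print(utilitarian_choice(options).name)  # swerve
```

The selection step is trivial; everything contentious lives in `expected_harmed`. Change how harm is estimated or weighted and the same function can return a different answer.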

However, applying utilitarianism to robots isn’t without its complications. Different societies have different values, and what constitutes “the greatest good” can be subjective. There is also the issue of how to quantify happiness or suffering in a way that a machine can understand.

2. Deontological Ethics: Duty Over Consequences

Deontological ethics, rooted in the philosophy of Immanuel Kant, argues that morality is not just about outcomes but also about following moral rules or duties. In this framework, certain actions are morally required or forbidden, regardless of their consequences.

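For a robot, this could mean following strict ethical rules, such as never actively causing harm to a human, no matter the situation. In the case of the self-driving car, a deontological robot might refuse to swerve into the wall, even if staying its course results in greater overall harm, because swerving means deliberately steering harm onto the passenger. Note, however, that a blanket rule such as “never harm a human” cannot be satisfied here at all, since both options injure someone; the rules must be stated precisely enough to distinguish between the choices.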
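
A rule-based robot can be sketched as a filter: each duty rules options out, and consequences are never compared. This is a minimal illustration only; the `Option` fields, the two example rules, and the scenario are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Option:
    name: str
    redirects_harm: bool  # hypothetical: does this maneuver actively steer harm onto someone?
    harms_human: bool     # hypothetical: does this option injure anyone at all?


# A rule returns True when an option VIOLATES it.
Rule = Callable[[Option], bool]


def permitted(options: list[Option], rules: list[Rule]) -> list[Option]:
    # Discard every option that breaks any rule; outcomes are never weighed.
    return [o for o in options if not any(rule(o) for rule in rules)]


def no_redirect(o: Option) -> bool:
    return o.redirects_harm


def never_harm(o: Option) -> bool:
    return o.harms_human


options = [
    Option("stay_course", redirects_harm=False, harms_human=True),
    Option("swerve", redirects_harm=True, harms_human=True),
]
print([o.name for o in permitted(options, [no_redirect])])  # ['stay_course']
print([o.name for o in permitted(options, [never_harm])])   # []
```

The empty result under the blanket rule is rigidity in miniature: the rules alone leave the robot with no permitted action, and the designer must still decide what happens then.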

While deontology provides clarity and predictability, it can be rigid in complex, real-world situations. If a robot strictly follows its programmed duties, it may not always act in a way that humans consider “moral” or “just.”

3. Virtue Ethics: The Character of the Decision-Maker

Virtue ethics, developed by Aristotle, focuses on the character of the decision-maker rather than the consequences of their actions. In this model, an action is morally right if it is what a virtuous person—someone who exhibits traits like compassion, courage, and wisdom—would do.

In the case of robots, virtue ethics could guide them to act in a manner that aligns with human values such as kindness, empathy, and wisdom. This might mean training robots to recognize complex human emotions and to choose the response that a compassionate, prudent person would choose in that particular context.

However, programming virtues into robots is a formidable challenge. Virtue ethics relies heavily on context and judgment, which can be difficult for machines to emulate.

The Challenge of Programming Ethics Into Machines

While ethical theories provide a framework, implementing these ideas into a robot’s decision-making process is far from simple. There are several challenges that engineers and ethicists face when programming robots to make ethical decisions.

1. Moral Uncertainty

One of the primary issues is moral uncertainty. In many situations, there is no clear “right” or “wrong” answer. Ethical dilemmas often involve conflicting principles, where no decision satisfies all moral criteria. A robot that must choose between two morally conflicting actions might not be able to arrive at a definitive conclusion, especially if it’s required to act in real time.

For example, in a situation where a self-driving car is faced with the choice of saving the driver or a group of pedestrians, a robot would be forced to evaluate conflicting ethical imperatives: the value of individual life versus the value of saving multiple lives. Moral uncertainty increases when considering scenarios that involve more complex human values, such as emotional well-being.
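
One way to see this conflict is to evaluate the same options under two theories and check whether they agree. The sketch below is a toy under stated assumptions: the harm estimates and the `redirects_harm` flag are hypothetical, and reporting `None` on disagreement is just one illustrative policy, since a real vehicle would still have to do something.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Option:
    name: str
    expected_harmed: float  # hypothetical estimate of people harmed
    redirects_harm: bool    # hypothetical: does the maneuver actively steer harm onto someone?


def utilitarian_verdict(options: list[Option]) -> str:
    # Fewest people harmed wins.
    return min(options, key=lambda o: o.expected_harmed).name


def deontological_verdict(options: list[Option]) -> Optional[str]:
    # First option that does not actively redirect harm, if any exists.
    allowed = [o for o in options if not o.redirects_harm]
    return allowed[0].name if allowed else None


def decide(options: list[Option]) -> tuple[Optional[str], dict[str, Optional[str]]]:
    verdicts = {
        "utilitarian": utilitarian_verdict(options),
        "deontological": deontological_verdict(options),
    }
    distinct = set(verdicts.values())
    # Act only when every theory points to the same option; otherwise
    # report the conflict (None) rather than pretend one answer is definitive.
    choice = distinct.pop() if len(distinct) == 1 else None
    return choice, verdicts


dilemma = [
    Option("stay_course", expected_harmed=3.0, redirects_harm=False),  # group of pedestrians
    Option("swerve", expected_harmed=1.0, redirects_harm=True),        # the driver
]
print(decide(dilemma))
# (None, {'utilitarian': 'swerve', 'deontological': 'stay_course'})
```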

2. Bias in Data and Programming

Another major challenge is bias. Robots, particularly those powered by AI, learn from data. If the data fed into the system is biased—whether in terms of race, gender, or socio-economic status—the decisions made by the robot could inadvertently reinforce those biases. In ethical decision-making, this can be catastrophic, leading to unfair treatment or harm to certain individuals or groups.
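
One common, and limited, way to check for this kind of bias is to compare how often a system's decisions favor people in different groups, a measure often called the demographic parity difference. The sketch below assumes a hypothetical log of (group, outcome) pairs; the data is invented, and a small gap on its own does not prove a system is fair.

```python
from collections import defaultdict


def favorable_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable decisions per group, from (group, favorable) pairs."""
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        favorable[group] += int(ok)
    return {g: favorable[g] / totals[g] for g in totals}


def parity_gap(decisions: list[tuple[str, bool]]) -> float:
    # Difference between the most- and least-favored groups' rates.
    rates = favorable_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: group label and whether the decision favored the person.
log = [("A", True), ("A", True), ("A", False), ("A", True),
       ("B", True), ("B", False), ("B", False), ("B", False)]
print(favorable_rates(log))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(log))       # 0.5
```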

Efforts to mitigate bias in AI are underway, but these biases remain a significant concern when robots are making critical, ethical decisions that affect people’s lives.

3. Accountability and Responsibility

Who should be held responsible when a robot makes an unethical decision? Should it be the engineers who programmed the robot, the manufacturers, or the users of the robot? In some cases, ethical violations may occur because of faulty programming, unforeseen circumstances, or limitations in the robot’s design.

Legal frameworks around AI and robotics are still developing, and determining accountability for a robot’s actions remains a complex and unresolved issue. The question of “who is responsible” takes on added weight when the decisions made by robots impact human lives.

Case Studies: Ethical Dilemmas in Action

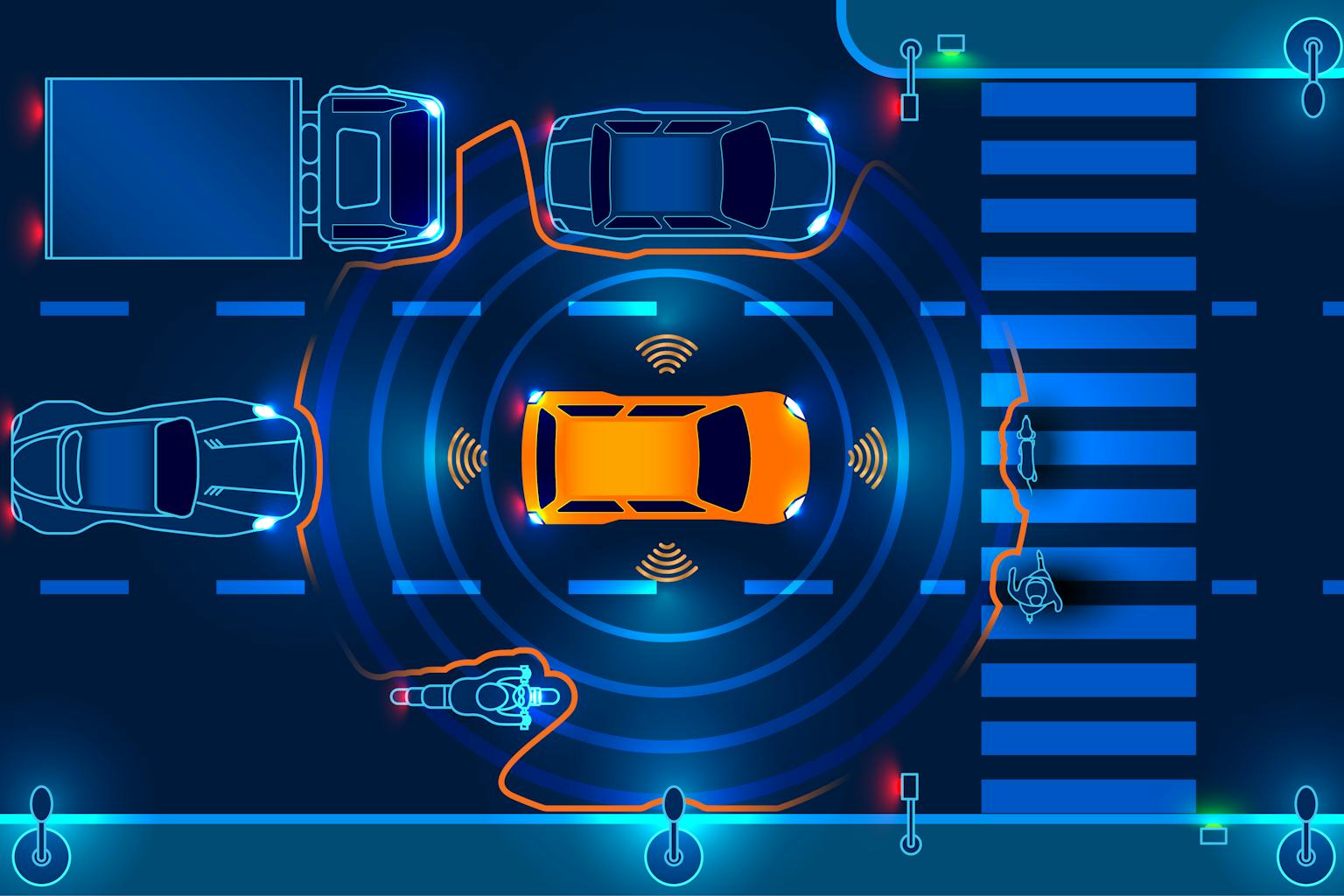

1. Self-Driving Cars

Perhaps the most widely discussed example of robots making ethical decisions comes from the realm of self-driving cars. In March 2018, an Uber test vehicle operating in self-driving mode struck and killed a pedestrian in Tempe, Arizona. The incident raised difficult questions about the ethical framework governing autonomous vehicles.

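Investigators later found that the system had detected the pedestrian but did not correctly identify her as a pedestrian or predict her path in time, so the crash was not a deliberate moral trade-off. Still, it sharpened the broader questions: what ethical guidelines should a car’s AI follow when a collision truly is unavoidable, and how should it balance the safety of its passengers against that of people outside the vehicle?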

2. Medical Robots

In healthcare, robots like surgical assistants and diagnostic tools are becoming common. These robots often help doctors make crucial decisions. But should robots be allowed to make life-or-death decisions without human oversight? And if so, who decides what ethical guidelines govern these robots’ actions?

For example, imagine a robot tasked with allocating scarce medical resources, such as donor organs or ICU beds during a crisis. The robot must decide who receives treatment and who does not. Should it prioritize the patients with the best chance of survival, or should it give every patient an equal chance?
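
The two policies in that question can be written side by side. This is a sketch only: the `Patient` type and its survival probabilities are hypothetical, real triage protocols weigh many more factors, and a lottery stands in here for “give every patient an equal chance.”

```python
import random
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    survival_prob: float  # hypothetical clinical estimate of survival if treated


def allocate_by_prognosis(patients: list[Patient], beds: int) -> list[str]:
    # Give the scarce beds to the patients most likely to survive.
    ranked = sorted(patients, key=lambda p: p.survival_prob, reverse=True)
    return [p.name for p in ranked[:beds]]


def allocate_by_lottery(patients: list[Patient], beds: int, rng: random.Random) -> list[str]:
    # Equal chance for everyone: draw `beds` distinct patients at random.
    return [p.name for p in rng.sample(patients, beds)]


patients = [Patient("P1", 0.9), Patient("P2", 0.4), Patient("P3", 0.7)]
print(allocate_by_prognosis(patients, beds=2))  # ['P1', 'P3']
print(allocate_by_lottery(patients, beds=2, rng=random.Random(0)))
```

Both functions are a few lines long; the ethical weight lies entirely in deciding which one the robot should call.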

3. Military Drones

Another area where robots are making ethical decisions is in the military. Autonomous drones, capable of launching attacks without human intervention, present significant ethical concerns. When a drone decides to engage a target, how does it assess the moral justification of the attack?

Should a robot soldier be allowed to make decisions that could lead to loss of life, especially when the human operator is not involved in the decision? The ethical implications of robotic warfare are vast, and the international community is grappling with how to regulate autonomous weapons.

What Does the Future Hold?

As AI continues to advance, the ethical decisions made by robots will only grow in complexity. In the coming years, we may see widespread use of robots in high-stakes fields such as law enforcement, healthcare, and military operations. The decisions they make could have far-reaching consequences for humanity.

As we move forward, several steps must be taken:

- Ethical Guidelines for AI Development: Governments and organizations must collaborate to establish ethical frameworks for AI development and deployment.

- Public Discourse: We need open conversations about the moral implications of robots making decisions that affect human lives.

- Human Oversight: Despite the advances in AI, human oversight should remain integral to decision-making processes, ensuring that robots act within ethical and legal boundaries.
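
Human oversight is often implemented as a gate: routine actions run automatically, while high-stakes ones wait for a person to approve them. The sketch below is illustrative only; the `Proposal` type, its `risk` score, and the 0.2 threshold are hypothetical, and the `approve` callback stands in for whatever real review process is used.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    action: str
    risk: float  # hypothetical 0..1 estimate of potential harm


def execute_with_oversight(proposal: Proposal,
                           approve: Callable[[Proposal], bool],
                           risk_threshold: float = 0.2) -> str:
    # Low-risk actions run automatically.
    if proposal.risk <= risk_threshold:
        return f"executed: {proposal.action}"
    # Anything riskier is held until a human explicitly approves it.
    if approve(proposal):
        return f"executed with approval: {proposal.action}"
    return f"blocked: {proposal.action}"


def deny_all(proposal: Proposal) -> bool:
    # Stand-in reviewer that rejects every request.
    return False


print(execute_with_oversight(Proposal("reorder supplies", 0.05), deny_all))  # executed: reorder supplies
print(execute_with_oversight(Proposal("administer drug", 0.6), deny_all))    # blocked: administer drug
```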

Conclusion: Navigating the Uncharted Waters

The notion of robots making ethical decisions is no longer a distant theoretical concept—it’s an urgent reality. As AI becomes more autonomous, the need for clear ethical frameworks, robust programming, and transparent regulations becomes paramount. As we navigate this uncharted territory, the role of ethics in robotics will shape not only the future of technology but also the future of humanity.