In the rapidly advancing world of robotics and artificial intelligence (AI), one question that lingers at the intersection of technology and philosophy is: Could we build robots with human emotions? The notion of robots possessing emotions is not confined to the realm of science fiction, as it has become a serious subject of study in robotics, AI, and cognitive science. While emotions are often considered uniquely human, there is a growing belief that it might be possible to simulate or even replicate these feelings in machines. In this article, we will explore the potential, challenges, and ethical considerations surrounding the development of emotionally intelligent robots.

The Emotional Landscape of Humans

To understand the complexity of building robots with emotions, we first need to look at human emotions. Emotions play a crucial role in human experience, influencing decision-making, social interactions, and personal well-being. They are often classified into basic categories such as happiness, sadness, fear, anger, surprise, and disgust (the six basic emotions proposed by psychologist Paul Ekman), but real emotional states are far more nuanced and often blend several of these. Emotions help us navigate the world, communicate with others, and adapt to changing circumstances.

In cognitive science, emotions are often viewed as an evolutionary adaptation that provides us with the ability to respond rapidly to environmental stimuli. For example, fear can trigger a fight-or-flight response to danger, while happiness can reinforce positive behaviors. These emotional responses are rooted in both biological processes (such as hormone secretion) and cognitive assessments (such as evaluating a situation as safe or threatening).

But how do these processes apply to robots? To replicate human-like emotions in machines, we can break the problem into two parts: sensing emotional cues and responding to them.

Can Robots Feel Emotions?

The first and most important question is: Can robots truly feel emotions? The short answer is no, at least not in the way humans do. Human emotions involve subjective experience and are shaped by biological factors such as brain activity, hormones, and genetics. Robots, on the other hand, lack the physiological systems that give rise to emotions. They are machines designed to process information and perform tasks based on algorithms.

However, emotions are not purely biological. They can be seen as a complex series of responses to stimuli that allow an organism to navigate the world effectively. In this sense, robots can be programmed to simulate emotions, based on sensors, data inputs, and machine learning algorithms. But is simulation enough? Can these machines appear to have emotions, even if they do not truly experience them?

The Development of Emotion-Sensitive Robots

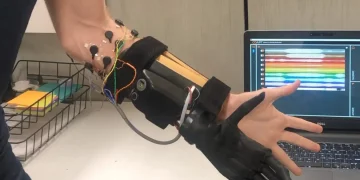

In recent years, engineers and AI researchers have made significant strides toward building robots that can mimic human emotional responses. These robots use a combination of sensors, artificial intelligence, and machine learning to recognize and respond to human emotions. For instance, robots can analyze facial expressions, tone of voice, and body language to detect emotions such as happiness, sadness, or anger.

Emotion Recognition

Emotion recognition technologies are at the core of creating emotionally intelligent robots. These systems employ algorithms that analyze data from various sources (facial expressions, voice intonation, and even physiological signals like heart rate or skin conductance) to infer emotional states. Facial expression analysis software, for example, attempts to detect micro-expressions, the brief and subtle changes in facial muscles that can reflect emotions. By combining these technologies with natural language processing (NLP), robots can interpret verbal and non-verbal cues to estimate how a person is feeling.
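To make the idea of combining several sources more concrete, here is a minimal sketch of one possible approach, often called late fusion: each source produces its own emotion estimate, and the estimates are merged with a weighted average. The emotion labels, scores, weights, and function names below are all hypothetical values chosen for illustration, not the output of any real system.

```python
from typing import Dict

# Hypothetical label set; real systems may use many more categories.
EMOTIONS = ("happiness", "sadness", "anger")


def fuse_estimates(estimates: Dict[str, Dict[str, float]],
                   weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-source emotion scores (late fusion)."""
    total_weight = sum(weights[source] for source in estimates)
    if total_weight <= 0:
        raise ValueError("at least one source with a positive weight is required")

    fused = {emotion: 0.0 for emotion in EMOTIONS}
    for source, scores in estimates.items():
        for emotion in EMOTIONS:
            fused[emotion] += weights[source] * scores.get(emotion, 0.0)

    # Divide by the total weight so the result stays on the same scale
    # as the inputs.
    return {emotion: value / total_weight for emotion, value in fused.items()}


def most_likely(fused: Dict[str, float]) -> str:
    """Return the emotion label with the highest fused score."""
    return max(fused, key=fused.get)


# Made-up scores standing in for the outputs of a face, voice, and text model.
estimates = {
    "face": {"happiness": 0.2, "sadness": 0.7, "anger": 0.1},
    "voice": {"happiness": 0.1, "sadness": 0.6, "anger": 0.3},
    "text": {"happiness": 0.5, "sadness": 0.4, "anger": 0.1},
}
# Assumed trust in each source; in practice these would need tuning.
weights = {"face": 0.5, "voice": 0.3, "text": 0.2}

fused = fuse_estimates(estimates, weights)
print(most_likely(fused))  # prints "sadness"
```

In this example the words alone lean toward happiness, but the face and voice estimates outweigh them, so the combined guess is sadness. This is only one way to merge signals; the point is that no single cue has to be trusted on its own.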

Emotion Simulation

Once a robot can recognize human emotions, the next step is to simulate its own emotional responses. This is where things get more complex. While robots cannot feel emotions in the human sense, they can be designed to respond to emotional cues in ways that mimic human behavior. For example, a robot might display a smiling face when detecting happiness or express concern through its body language when sensing sadness.

Researchers have even gone a step further by equipping robots with emotional avatars—virtual representations that convey emotions through text or visual display. For example, a chatbot might be programmed to use kind and empathetic language when interacting with someone who is upset. Robots designed for healthcare or elder care might express simulated empathy to provide comfort, even though the machine itself does not experience those feelings.
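At its simplest, this kind of simulated response can be a lookup from a detected emotion to a display and a phrase. The sketch below assumes a hypothetical set of emotion labels and canned responses; real systems are far more elaborate, but the principle of mapping a recognized cue to a scripted reaction is the same.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    display: str  # what the robot's face or screen shows
    phrase: str   # what the robot says or writes


# Hypothetical mapping from a detected emotion to a simulated reaction.
RESPONSES = {
    "happiness": Response("smile", "That's great to hear!"),
    "sadness": Response("concerned", "I'm sorry you're feeling down. Would you like to talk?"),
    "anger": Response("calm", "I understand this is frustrating. Let's see how I can help."),
}

# Fallback used when the detected emotion is not in the mapping.
NEUTRAL = Response("neutral", "How can I help you today?")


def choose_response(detected_emotion: str) -> Response:
    """Pick a scripted reaction for the detected emotion, or stay neutral."""
    return RESPONSES.get(detected_emotion, NEUTRAL)


reaction = choose_response("sadness")
print(reaction.display)  # prints "concerned"
print(reaction.phrase)
```

Nothing in this code feels anything. It only selects an output that a person is likely to read as empathetic, which is exactly the distinction between simulating an emotion and experiencing one.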

The Role of Artificial Emotional Intelligence

The concept of Artificial Emotional Intelligence (AEI) has become increasingly relevant in the field of robotics. AEI involves the creation of algorithms and systems that allow machines to perceive, interpret, and respond to human emotions in a way that is emotionally appropriate. AEI is an extension of traditional AI but with an emphasis on emotional data rather than purely logical or rational information.

Affective computing, the research field most closely associated with AEI (the two terms are often used interchangeably), focuses specifically on the study and development of systems that can detect, interpret, and simulate emotions. Researchers in this field aim to create robots that not only recognize human emotions but also adapt their behavior in response to emotional contexts. This could lead to robots that can adjust their responses based on whether a person is frustrated, sad, or excited.

Practical Applications of Emotionally Intelligent Robots

The potential applications of robots with emotional intelligence are vast and wide-ranging. Some of the most promising areas include:

Healthcare and Elder Care

Emotionally intelligent robots could revolutionize healthcare, especially in areas like elderly care. Many elderly individuals experience loneliness and depression, and robots that can simulate empathy and respond to emotional cues could provide companionship and improve their quality of life. Paro, a robot designed to resemble a baby seal, is already being used in Japan’s nursing homes as a therapeutic companion. The robot responds to touch and sound, mimicking the behavior of a living animal to comfort residents.

In hospitals, emotionally intelligent robots could assist patients by recognizing distress or pain and responding with appropriate comfort or intervention. For example, a robot in a pediatric ward could recognize a child’s anxiety and offer soothing words or engage in playful activities to distract the patient.

Education and Child Development

In the field of education, robots with emotional intelligence could be used to teach children, particularly those with special needs. Children with autism spectrum disorder (ASD) often struggle with recognizing and expressing emotions. Robots designed to simulate emotions could serve as tools for helping these children develop social and emotional skills. For example, a robot could model facial expressions and provide feedback to children as they practice interpreting emotions.

Customer Service and Companionship

Emotionally intelligent robots could also find their place in customer service, where empathy and social intelligence are essential. A robot programmed to recognize frustration or dissatisfaction in a customer could adjust its tone or offer more assistance to ensure a positive experience. In entertainment, robots with emotional intelligence might act as companions, offering personalized interactions to people who seek social contact with artificial entities.

Ethical Considerations

While the idea of emotionally intelligent robots holds immense promise, it also raises several ethical concerns that must be addressed before widespread adoption.

Deception and Manipulation

One of the most significant ethical concerns is the potential for robots to deceive humans into thinking they are experiencing genuine emotions. If a robot behaves empathetically or expresses joy in response to human emotions, could this lead to a false sense of connection? Some argue that such emotional simulations might exploit human vulnerabilities, especially in cases where people may form emotional attachments to these machines.

Impact on Human Relationships

The widespread use of emotionally intelligent robots might also have unintended consequences for human relationships. If robots can simulate emotions, could this diminish the need for genuine human connection? There’s a fear that people might begin to rely on robots for companionship, replacing human relationships with machines.

Bias and Misinterpretation

Emotion recognition systems are not perfect. They might misinterpret a person’s emotional state based on incomplete or biased data, for instance when a system trained mostly on one group of people is applied to another. This could lead to robots responding inappropriately, such as offering comfort when it’s not needed or failing to notice real distress. Ethical questions about accountability and responsibility arise when machines fail to interpret human emotions accurately.
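One way a designer might reduce the harm from such mistakes is to have the robot decline to guess when its estimate is weak or ambiguous, and simply ask instead. The following is a minimal sketch of that idea, not an established standard; the threshold, margin, and function names are assumptions chosen for illustration.

```python
from typing import Dict, Optional

# Assumed cut-offs; suitable values would depend on the system and setting.
CONFIDENCE_THRESHOLD = 0.6  # the top score must be at least this high
MARGIN = 0.2                # and must lead the runner-up by at least this much


def confident_emotion(scores: Dict[str, float],
                      threshold: float = CONFIDENCE_THRESHOLD,
                      margin: float = MARGIN) -> Optional[str]:
    """Return the top emotion only if the estimate is strong and unambiguous."""
    if not scores:
        return None
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    top_label, top_score = ranked[0]
    runner_up_score = ranked[1][1] if len(ranked) > 1 else 0.0
    if top_score < threshold or top_score - runner_up_score < margin:
        return None  # too uncertain to act on
    return top_label


def respond(scores: Dict[str, float]) -> str:
    """Act on a confident estimate; otherwise ask rather than assume."""
    emotion = confident_emotion(scores)
    if emotion is None:
        return "How are you feeling right now?"
    return f"It sounds like you may be feeling {emotion}."


# Hypothetical estimates: one clear, one ambiguous.
print(respond({"sadness": 0.8, "anger": 0.1, "happiness": 0.1}))
print(respond({"sadness": 0.45, "anger": 0.4, "happiness": 0.15}))
```

A check like this does not remove bias from the underlying data, and a system can still be confidently wrong. It only limits how often the robot acts on a shaky guess.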

Privacy and Data Security

As emotionally intelligent robots rely heavily on sensors to gather emotional data, concerns about privacy and data security become paramount. The data collected by robots could be used to infer personal information about individuals’ emotions, behavior, and even their mental health. Proper safeguards must be in place to ensure that this data is not misused or accessed without consent.

The Future of Emotionally Intelligent Robots

The future of robots with human-like emotions is both exciting and fraught with challenges. As technology advances, we may see robots that are increasingly capable of understanding, simulating, and responding to human emotions. While these robots will not “feel” emotions as humans do, they will serve as powerful tools to assist in healthcare, education, and customer service, among other fields.

However, as we continue to develop emotionally intelligent robots, it is crucial to balance innovation with ethical considerations. Transparency, accountability, and human oversight will be essential to ensuring that these technologies are used responsibly and do not undermine genuine human connection.