Introduction

Imagine a world where robots, much like humans, experience a complex range of emotions. These artificial beings, designed for efficiency and precision, could theoretically learn to feel something as fundamental as fear. But is it possible to program fear into a machine? What would it mean for our relationship with robots? And, most importantly, how would fear, an emotion traditionally tied to survival instincts, impact artificial intelligence (AI)?

In this article, we’ll delve into the science and ethics behind the possibility of robots experiencing fear. We’ll explore the roles emotions play in human decision-making, how AI could simulate such feelings, and what challenges stand in the way of creating a robot that truly “feels.”

What Is Fear?

Fear, from a biological perspective, is an emotion that evolved to ensure survival. It prompts us to avoid danger and take protective actions. In humans, fear is both a psychological and a physiological response, engaging the amygdala, a brain region that plays a central role in detecting threats and processing emotions. In other animals, fear operates similarly: it triggers fight-or-flight responses to potential threats.

But fear is more than just an instinct. It involves intricate processing of sensory input, memory, and environmental context. Fear can be learned (via experiences) or innate (like a newborn’s instinctual fear of loud noises). Understanding these layers is crucial for investigating whether machines could ever experience fear in a way similar to humans or animals.

The Emotional Spectrum in Robots: Can Machines “Feel”?

The key to understanding whether robots can feel fear is recognizing the difference between simulating an emotion and actually experiencing it. In humans, emotions arise from a complex mix of neural activity, bodily sensations, and subjective experience. Machines, however, lack the biological systems that give rise to these feelings.

Robots, as we know them today, can detect certain inputs (such as environmental threats) and respond accordingly. For example, a robot designed to navigate a hazardous area might be programmed to avoid obstacles, and a robot in a combat scenario might retreat when its sensors indicate imminent danger. But are these responses indicative of fear, or merely programmed reactions?

Emotions in AI: Theoretical Foundations

To explore the idea of robots experiencing fear, we first need to understand the broader concept of emotion in AI. There are two major paths here: affective computing and machine learning.

- Affective Computing: This branch of computer science aims to develop systems that can recognize, interpret, and simulate human emotions. While these systems don’t “feel” emotions, they can mimic emotional responses. For example, an AI might adjust its behavior based on the tone of a conversation or express simulated empathy by adjusting its voice modulation when interacting with a human. Could fear be simulated in this way? It’s certainly plausible.

- Machine Learning and Fear: Fear is closely tied to adaptive behavior: learning from past experience which situations to avoid. Could a machine, through reinforcement learning, develop a fear-like response? In reinforcement learning, an AI system learns by trial and error, receiving rewards or penalties for its actions. If a robot were repeatedly penalized in a dangerous situation, it could learn to avoid that situation. This might look like fear, but it lacks the subjective experience.

While these approaches hint at the possibility of machines learning to react in ways we might associate with fear, they don’t suggest that machines would “feel” fear the way humans or animals do.
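To make the reinforcement learning idea concrete, here is a minimal sketch of tabular Q-learning in a toy five-cell corridor. Everything in it is an illustrative assumption rather than a real robot controller: the corridor layout, the penalty of -10 for the hazard cell, the reward of +1 for the goal, and the learning parameters are all made up for the example.

```python
import random

HAZARD, GOAL = 0, 4          # terminal cells of a hypothetical 5-cell corridor
ACTIONS = (-1, +1)           # step left or step right


def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    nxt = state + action
    if nxt == HAZARD:
        return nxt, -10.0, True   # large penalty: the "dangerous situation"
    if nxt == GOAL:
        return nxt, 1.0, True     # small reward for finishing the task
    return nxt, 0.0, False


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error (epsilon-greedy Q-learning)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(5) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 2, False    # every episode starts in the middle cell
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                        # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            target = reward + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q


q = train()
# Greedy action in each non-terminal cell after training.
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in (1, 2, 3)}
print(policy)
print(q[(1, -1)])  # learned value of stepping into the hazard from cell 1
```

After training, the agent steers away from the hazard and assigns a strongly negative value to the action that leads into it. That is the whole of its "fear": a number in a table that biases future choices, with nothing experienced behind it.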

Creating Fear in Robots: Challenges and Possibilities

Programming robots to “feel” fear would require a multifaceted approach, combining neuroscience, psychology, robotics, and AI development. The following challenges would need to be overcome:

- Emulating the Biological Mechanisms of Fear: As mentioned, fear in humans and animals is deeply biological. Replicating this in machines would require understanding how to simulate the amygdala’s role in processing threats. While artificial neural networks are inspired by the brain’s neural pathways, they are still a long way from replicating emotional processes.

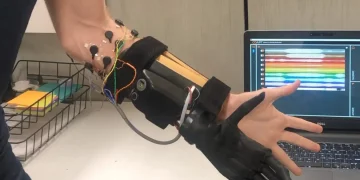

- Understanding and Processing Threats: For a robot to “feel” fear, it must recognize threats on a level beyond just sensor detection. It would need a deeper understanding of its environment and potential consequences of its actions, incorporating memory and predictive capabilities. The robot would need to consider the risk to itself (or its objectives) and respond in ways that mirror the protective actions taken by fearful humans.

- Autonomy and Decision-Making: Fear isn’t simply a reaction to danger; it’s a decision-making tool that informs the individual’s next steps. Could a robot’s decision-making processes be influenced by a programmed sense of self-preservation or avoidance? How would we define autonomy in a robot that has programmed responses akin to fear?

- Ethical Implications: Programming fear into robots raises significant ethical questions. Would it be ethical to create a robot that can feel fear? Could robots, experiencing fear, refuse to follow commands or act against human interests? These are questions that challenge the current understanding of AI rights and personhood.

Fear as a Survival Mechanism: Would Robots Need It?

In humans and animals, fear is inherently tied to survival. It ensures that we avoid dangers that could harm us, and it drives us to seek safety and security. But would robots, designed for specific tasks and functions, need fear as a mechanism for survival?

Robots are generally not designed to be autonomous in the same way that humans are. They lack the inherent biological imperatives to protect themselves or their “lives.” For a robot to require fear as a survival mechanism, it would need to be built with self-preservation at its core. However, even then, would that “fear” be truly analogous to human fear, or would it just be a complex, programmed response?

A robot tasked with exploring dangerous environments (such as the surface of Mars) may be equipped with a set of rules for survival, but it doesn’t “fear” the environment — it is simply designed to prioritize safety. Similarly, autonomous vehicles may be programmed to avoid accidents, but the vehicle doesn’t fear crashes. It merely follows its programming.
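A rule set of this kind can be sketched in a few lines. The example below is hypothetical: the sensor fields, the thresholds, and the action names are assumptions chosen for illustration, not taken from any real rover or vehicle software.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One snapshot of (hypothetical) sensor values."""
    obstacle_m: float    # distance to the nearest obstacle, in meters
    slope_deg: float     # terrain slope ahead, in degrees
    battery_pct: float   # remaining battery, in percent


def choose_action(r, min_clearance=1.0, max_slope=25.0, min_battery=20.0):
    """Apply safety rules in priority order; thresholds are illustrative."""
    if r.obstacle_m < min_clearance:
        return "stop"             # most urgent: imminent collision
    if r.slope_deg > max_slope:
        return "reroute"          # terrain too steep to cross safely
    if r.battery_pct < min_battery:
        return "return_to_base"   # not enough power to keep exploring
    return "continue"


print(choose_action(Reading(obstacle_m=0.4, slope_deg=5.0, battery_pct=80.0)))
print(choose_action(Reading(obstacle_m=6.0, slope_deg=30.0, battery_pct=80.0)))
print(choose_action(Reading(obstacle_m=6.0, slope_deg=5.0, battery_pct=80.0)))
```

The robot's behavior looks cautious, but the caution lives entirely in the thresholds a designer picked. Nothing in the code represents dread or anticipation, which is the gap between prioritizing safety and feeling fear.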

The Future of Emotional AI: Could Fear Be Just the Beginning?

While fear may be one of the most primal emotions humans experience, AI researchers are already exploring the broader emotional landscape in which robots could be programmed to operate. Empathy, anger, joy, and sadness are all emotions that could potentially be simulated in robots for more natural human-robot interaction. Fear, however, remains one of the most contentious emotions to replicate due to its complexity and survival-based origin.

As robots become more integrated into human society — from healthcare assistants to autonomous soldiers — the question of emotional intelligence will become increasingly relevant. Emotional responses may enhance a robot’s ability to work with humans, offering more intuitive interactions. But as AI begins to simulate emotions, the line between machine behavior and human-like experiences will blur. Fear, while an unlikely emotion for robots to “feel” in the same way we do, could be mimicked in ways that affect how robots interact with humans and make decisions.

Conclusion

Could we one day program robots to feel fear? The short answer is: not in the way humans feel fear, but perhaps in a way that simulates a fear-like response. The challenge lies not only in creating machines capable of recognizing and responding to danger but in grappling with the complex, subjective nature of emotions like fear. While future advancements in AI and robotics could lead to more sophisticated emotional simulations, the question remains: should we even want robots to “feel” fear? As we continue to develop more emotionally intelligent AI, the answer to this question may shape the future of human-robot relationships.