Introduction: The Ethical Dilemma of Machines

In a world where artificial intelligence (AI) and robotics are rapidly advancing, the question of whether robots can be ethical is becoming increasingly important. As these machines gain capabilities that mimic human decision-making, the issue is no longer just technical proficiency but also moral accountability. Can a robot ever truly possess ethics? And if so, what does that mean for the future of our relationship with machines?

Ethics, at its core, is about making decisions that align with moral principles. For humans, these principles are often rooted in culture, religion, philosophy, and personal experiences. But when it comes to machines, we must ask: can something that lacks consciousness and emotion understand or even follow ethical guidelines? And if robots are designed to make ethical decisions, can they do so without bias, error, or harm?

The Ethics of Robotics: A Brief Overview

The term “robot” comes from the Czech word robota, meaning forced labor. Since Karel Čapek introduced the term in his 1920 play R.U.R., the idea of robots has evolved from simple machines designed to perform repetitive tasks to complex, intelligent systems capable of performing high-level functions. As robots become more integrated into society, the question of ethics becomes more pressing.

The concept of ethical robots has been widely explored in science fiction, with Isaac Asimov’s “Three Laws of Robotics” being one of the most famous frameworks. According to Asimov, a robot must:

- First Law: Never injure a human being or, through inaction, allow a human being to come to harm.

- Second Law: Obey the orders given to it by humans, except where such orders would conflict with the First Law.

- Third Law: Protect its own existence, as long as such protection does not conflict with the First or Second Law.
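Read as a decision procedure, the three laws form a strict priority ordering: avoiding a First Law violation always outweighs any number of Second or Third Law violations. The sketch below shows that ordering as a lexicographic comparison. It is a hypothetical illustration only: the `Action` type and its yes/no predictions are assumptions, and a real robot has no reliable way to compute something like `harms_human`, which is exactly where the difficulty begins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action with hypothetical yes/no predictions about its effects."""
    name: str
    harms_human: bool     # First Law: would this injure a human, or let one come to harm through inaction?
    disobeys_order: bool  # Second Law: does it go against a human's order?
    endangers_self: bool  # Third Law: does it put the robot itself at risk?


def law_violations(action: Action) -> tuple[bool, bool, bool]:
    # Ordered by priority. False sorts before True, so tuples with
    # fewer high-priority violations compare as smaller.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose(actions: list[Action]) -> Action:
    # Lexicographic minimum: a First Law violation outweighs everything below it.
    return min(actions, key=law_violations)


# Ordered to stay put while a person is about to be hit.
stay = Action("stay_put", harms_human=True, disobeys_order=False, endangers_self=False)
push = Action("push_person_clear", harms_human=False, disobeys_order=True, endangers_self=True)
print(choose([stay, push]).name)  # push_person_clear
```

The robot disobeys and risks itself because the First Law dominates; the ordering, not the number of violations, decides.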

These rules were designed to ensure that robots would act in ways that were beneficial and safe for humanity. However, as technology progresses, the simple notion of a machine following rules becomes more complicated. Robots now interact with humans in more nuanced ways and must make complex decisions that go beyond following basic commands.

Challenges in Programming Ethics

One of the central challenges in programming robots to be ethical lies in the ambiguity and complexity of human morality. Ethical decisions are rarely clear-cut. Take, for example, a self-driving car. If a situation arises where an accident is unavoidable, should the car prioritize the safety of its passengers over pedestrians or vice versa? This is a modern variant of the trolley problem, a classic thought experiment in ethics.

While humans can weigh the moral implications of such decisions based on their values, how can a robot be programmed to make the “right” choice? And more importantly, whose ethics should guide the decision-making process? In a globalized world, where moral views vary significantly across cultures, the notion of universal ethics becomes even more elusive.
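A toy cost function makes the “whose ethics” question concrete: in the same scenario, the “right” choice flips depending on how the designer weights passengers against pedestrians. Everything below (the `Outcome` type, the risk counts, the weights) is a hypothetical illustration, not how any actual autonomous vehicle is programmed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    passengers_at_risk: int   # hypothetical counts, not real crash data
    pedestrians_at_risk: int


def cost(o: Outcome, passenger_weight: float, pedestrian_weight: float) -> float:
    # The weights ARE the ethical stance; nothing in the code says which stance is correct.
    return passenger_weight * o.passengers_at_risk + pedestrian_weight * o.pedestrians_at_risk


def decide(options: list[Outcome], passenger_weight: float, pedestrian_weight: float) -> Outcome:
    # Pick the option with the lowest weighted harm.
    return min(options, key=lambda o: cost(o, passenger_weight, pedestrian_weight))


options = [
    Outcome("swerve_into_barrier", passengers_at_risk=2, pedestrians_at_risk=0),
    Outcome("stay_in_lane", passengers_at_risk=0, pedestrians_at_risk=1),
]

# Count every person equally: staying in the lane puts fewer people at risk.
print(decide(options, passenger_weight=1.0, pedestrian_weight=1.0).name)  # stay_in_lane
# Weight pedestrians three times as heavily: now the car swerves.
print(decide(options, passenger_weight=1.0, pedestrian_weight=3.0).name)  # swerve_into_barrier
```

The arithmetic is trivial; choosing the weights is the moral decision, and it is made by people before the car ever leaves the factory.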

Robots as Tools: The Role of Human Oversight

One argument against robots having true ethics is that they are, at their core, tools created by humans. Their actions, no matter how complex, are ultimately a reflection of the intentions and biases of their creators. As such, any ethical decision-making by a robot is simply a replication of human-designed systems and rules.

For example, a robot designed to assist the elderly might be programmed with certain ethical guidelines, such as respecting the autonomy of the person it is helping. However, the robot’s behavior is still determined by the values of its programmers and designers. Moreover, these systems might unintentionally encode biases (such as gender, racial, or socioeconomic biases) into the robot’s behavior. This is particularly troubling because a robot can apply such biases consistently, across every interaction, with no capacity to notice or question them.

Therefore, the ethical question might not be whether robots themselves can be ethical, but rather whether the people who design and control them are ethical. Should robots be held accountable for actions that were pre-programmed, or should responsibility always fall on the creators?

The Ethical Frameworks for Robots

If robots are to make ethical decisions at all, then frameworks for ethical programming must be developed. These frameworks must address several key areas:

1. Autonomy vs. Control

How much autonomy should robots have in making ethical decisions? Some argue that robots should always remain under human control to ensure that their actions align with human values, because too much autonomy could lead to unpredictable outcomes: a robot might, for example, interpret a moral rule in a way its designers did not intend. Others suggest that robots should be able to make decisions independently, but within guidelines and checks that keep them from causing harm.
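One common middle ground is a human-in-the-loop gate: the robot acts on its own for low-risk actions and must ask a person before high-risk ones. In the minimal sketch below, the risk score, the `0.5` threshold, and the `approve` callback are all hypothetical stand-ins; estimating risk reliably is itself an open problem.

```python
from typing import Callable


def execute(action: str, risk: float, approve: Callable[[str], bool],
            threshold: float = 0.5) -> str:
    """Act autonomously below the risk threshold; above it, defer to a human."""
    if risk < threshold:
        return f"executed {action}"
    # High-stakes action: a human must sign off before the robot proceeds.
    if approve(action):
        return f"executed {action} (human-approved)"
    return f"blocked {action}"


def refuse_all(action: str) -> bool:
    # Stand-in for a real operator prompt; this "human" refuses everything.
    return False


print(execute("open_curtains", risk=0.1, approve=refuse_all))      # executed open_curtains
print(execute("adjust_medication", risk=0.9, approve=refuse_all))  # blocked adjust_medication
```

The threshold is where the autonomy-versus-control trade-off lives: lowering it hands more decisions to humans, raising it hands more to the machine.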

2. Transparency

For a robot’s ethical decisions to be trusted, its decision-making process must be transparent: people must be able to understand how the robot arrived at a specific conclusion. This transparency would allow humans to review and correct decisions if necessary, ensuring accountability. However, it poses another challenge: the more complex the robot’s decision-making process becomes, the harder it is to understand or predict.
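For a small rule-based system, transparency can be as simple as recording which rule fired and why. The sketch below revisits the elder-care example with two hypothetical rules (the condition names and rationales are invented for illustration). Note that the rule order itself encodes a value judgment, safety before stated preference, and the trace makes that judgment visible to a reviewer.

```python
# Rules are checked in priority order; each is (condition, action, rationale).
# These rules are hypothetical and exist only to illustrate a decision trace.
RULES = [
    ("fall_detected", "call_caregiver", "safety risk outweighs routine assistance"),
    ("user_declined", "wait", "respect the person's stated choice"),
]


def decide_with_trace(situation: dict[str, bool]) -> tuple[str, list[str]]:
    trace: list[str] = []
    for condition, action, rationale in RULES:
        if situation.get(condition, False):
            trace.append(f"{condition}=True -> {action}: {rationale}")
            return action, trace
        trace.append(f"{condition}=False -> skip")
    trace.append("no rule matched -> assist (default)")
    return "assist", trace


action, trace = decide_with_trace({"user_declined": True})
print(action)  # wait
for step in trace:
    print(step)
# fall_detected=False -> skip
# user_declined=True -> wait: respect the person's stated choice
```

A learned model offers no equivalent list of rules to print, which is why transparency gets harder as decision-making grows more complex.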

3. Context Sensitivity

Ethical decisions are rarely made in a vacuum. They depend heavily on the context in which they occur. For instance, a robot operating in a healthcare setting might need to prioritize patient privacy while balancing the need to share information with other medical professionals. A robot in a military context might face situations where ethical considerations conflict with tactical objectives. Designing robots that can understand and adapt to these nuanced contexts is a major challenge for ethical programming.
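The healthcare example can be sketched as a policy keyed on context rather than on the data alone: the same field may be shareable with one person for one purpose and not with another. The roles, purposes, and fields below are hypothetical and are not modeled on any real privacy regulation.

```python
# Hypothetical policy: which patient-record fields may be shared, keyed by
# (who is asking, why they are asking). Not modeled on any real regulation.
SHARING_POLICY: dict[tuple[str, str], set[str]] = {
    ("treating_clinician", "treatment"): {"diagnosis", "medications", "room_number"},
    ("family_member", "visit"): {"room_number"},
}


def may_share(field: str, role: str, purpose: str) -> bool:
    # Unknown contexts fall back to sharing nothing (deny by default).
    return field in SHARING_POLICY.get((role, purpose), set())


print(may_share("diagnosis", "treating_clinician", "treatment"))  # True
print(may_share("diagnosis", "family_member", "visit"))           # False
print(may_share("room_number", "family_member", "visit"))         # True
```

The lookup is the easy part. The hard part, and the real challenge the paragraph describes, is for the robot to recognize correctly who is asking and why.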

Artificial Intelligence and Ethics: A Symbiotic Relationship

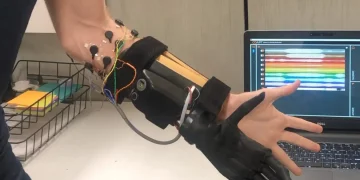

AI plays a crucial role in enabling robots to make ethical decisions. AI systems, especially machine learning algorithms, can process vast amounts of data and recognize patterns that would be difficult or impractical for humans to detect. This ability makes AI a powerful tool for helping robots make decisions in real time.

However, AI itself presents significant ethical concerns. AI systems can perpetuate or even exacerbate existing biases if they are trained on biased data sets. For example, an AI system trained to recognize faces might be less accurate for people with darker skin tones if its training data consisted predominantly of lighter-skinned faces. Similarly, an AI system that learns from past human behavior might inadvertently reinforce harmful stereotypes.
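A basic first check for this kind of bias is to measure accuracy separately for each group instead of only overall. The sketch below uses invented toy data with placeholder group labels "A" and "B"; it is not a result from any real face-recognition system.

```python
from collections import defaultdict


def accuracy_by_group(groups: list[str], predictions: list[int],
                      labels: list[int]) -> dict[str, float]:
    """Accuracy computed separately for each group."""
    if not (len(groups) == len(predictions) == len(labels)):
        raise ValueError("inputs must have the same length")
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, pred, label in zip(groups, predictions, labels):
        total[group] += 1
        correct[group] += int(pred == label)
    return {g: correct[g] / total[g] for g in total}


# Toy data, invented for illustration: the model is perfect on group A
# but right only half the time on group B.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 0, 1, 0, 1, 0, 1, 0]
predictions = [1, 0, 1, 0, 0, 0, 1, 1]

acc = accuracy_by_group(groups, predictions, labels)
print(acc)                                    # {'A': 1.0, 'B': 0.5}
print(max(acc.values()) - min(acc.values()))  # 0.5
```

The overall accuracy here is 6 out of 8, or 75%, a number that looks acceptable on its own and hides the fact that one group bears all of the errors.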

Furthermore, there are concerns about the lack of transparency in many AI systems, particularly deep learning models. These models often function as “black boxes,” where the rationale behind their decisions is not easily understandable. This raises questions about accountability and fairness. If an AI system makes an unethical decision, who is responsible? The creators of the system, the users of the system, or the machine itself?

The Future of Ethical Robots

As robotics and AI technologies continue to advance, the question of whether robots can be ethical will likely become more pressing. In the future, robots may be capable of making increasingly complex decisions that affect human lives in profound ways. Autonomous vehicles, healthcare robots, and military drones are just a few examples of robots that will need to navigate ethical dilemmas on a daily basis.

In this future, the challenge will not be just about programming ethics into robots, but also about ensuring that the ethical guidelines they follow are inclusive, adaptable, and reflective of a diverse range of human values. The development of these systems will require collaboration between ethicists, technologists, lawmakers, and the public to ensure that robots remain tools for the good of humanity.

At the same time, society must prepare for the possibility that robots may one day surpass human capabilities in some areas. As this happens, the ethical boundaries of robot decision-making will need to evolve, perhaps even challenging our current understanding of morality itself.

Conclusion: Can Robots Be Ethical?

The question of whether robots can be ethical is more than just a technological one; it’s a deeply philosophical inquiry. While robots themselves cannot inherently possess ethics in the way that humans can, they can be programmed to follow ethical guidelines. However, these guidelines are always a reflection of the values and intentions of their creators.

As AI and robotics continue to evolve, the responsibility for ensuring ethical behavior in robots will lie with humans—both in designing these systems and in overseeing their use. We may not be ready to hand over moral responsibility to machines, but the future of ethical robotics will depend on creating systems that are transparent, adaptable, and aligned with human values. In the end, robots may not be ethical on their own, but they can help us strive toward a more ethical world if we design them with care and consideration.