The idea of an intelligent robot has captivated people for decades. In science fiction and in real engineering alike, robots that think, adapt, and learn have been a long-standing goal. But what makes a robot intelligent? Is it the ability to process information? The capacity to mimic human behavior? Or does the answer call for a deeper, more intricate definition?

In this article, we will explore the multifaceted nature of robot intelligence, moving beyond conventional perceptions and delving into the core of what makes a robot truly intelligent.

Defining Intelligence in Robots

When we talk about intelligence in robots, we must first address what “intelligence” itself means. The word is often associated with human abilities—like reasoning, problem-solving, learning from experience, and adapting to new situations. However, the concept of intelligence, in the context of machines, isn’t so straightforward. Are robots truly intelligent, or are they simply executing sophisticated algorithms without any understanding or consciousness?

Machine intelligence is commonly divided into two categories:

- Narrow Intelligence: This refers to robots that excel in a specific task—say, playing chess, diagnosing diseases, or navigating complex terrains. These robots are highly specialized but lack the flexibility to perform tasks outside their programmed capabilities.

- General Intelligence: In contrast, general intelligence suggests a robot that can perform a wide range of tasks with the ability to reason, learn, and adapt—much like a human. While narrow intelligence is already common (e.g., self-driving cars, chatbots, robotic vacuum cleaners), general intelligence remains a distant ideal.

Key Factors Contributing to Robot Intelligence

To understand robot intelligence more deeply, we must explore the key components that contribute to making a robot “intelligent.” Here are the primary elements that define robotic intelligence:

1. Perception and Sensory Input

The first step in a robot’s intelligent behavior is its ability to perceive its environment. This involves sensory systems that collect data through cameras, microphones, range sensors, and other instruments. A truly intelligent robot must be able to interpret this sensory data in real time, build a picture of its environment, and make decisions based on that information.

Example: Self-driving cars rely heavily on perception systems such as LiDAR, cameras, and radar to sense their surroundings, with GPS helping them determine where they are. They need to recognize road signs, detect obstacles, and predict the behavior of pedestrians, all of which require sophisticated perception and analysis.
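
One small piece of interpreting sensor data can be sketched in code. The example below is a minimal illustration, not a description of how any particular vehicle works: it combines two noisy distance estimates for the same obstacle, weighting each by the inverse of its variance so that the more reliable sensor counts for more. The function name `fuse_estimates` and all readings and variances are hypothetical values chosen for the example.

```python
def fuse_estimates(measurements):
    """Fuse (value, variance) pairs using inverse-variance weighting."""
    if not measurements:
        raise ValueError("need at least one measurement")
    # A sensor with a small variance (low noise) gets a large weight.
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(
        w * value for w, (value, _) in zip(weights, measurements)
    ) / total_weight
    # The fused estimate is never noisier than the best single sensor.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings: (distance to obstacle in meters, variance).
lidar = (10.0, 0.04)   # assumed to be precise
camera = (10.6, 0.36)  # assumed to be noisier

distance, variance = fuse_estimates([lidar, camera])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

The fused distance lands much closer to the LiDAR reading than to the camera reading, because the LiDAR value was assumed to be nine times less noisy.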

2. Learning and Adaptation

True intelligence isn’t about simply following predefined instructions; it’s about learning from experience and adapting to new, unexpected situations. This is where machine learning (ML) and deep learning (DL) come into play.

- Machine Learning: This involves training models on data so that a robot can make predictions or decisions without being explicitly programmed for every scenario.

- Deep Learning: A subset of ML, deep learning utilizes neural networks with multiple layers to process vast amounts of data and perform tasks like image recognition or speech understanding.

A robot that can learn from its environment and adjust its actions accordingly is more adaptable and “intelligent.”
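
Learning from examples instead of fixed rules can be shown at a very small scale. The sketch below trains a perceptron, one of the simplest learning algorithms, to decide whether a robot should brake based on two sensor features. It is a toy illustration under stated assumptions: the features (distance in meters, closing speed in meters per second), the labels, and the function names `train_perceptron` and `predict` are all hypothetical.

```python
def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Learn weights and a bias from labeled examples (labels are 0 or 1)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = label - predict(weights, bias, features)
            # Weights only change when the current prediction is wrong.
            weights = [
                w + learning_rate * error * x for w, x in zip(weights, features)
            ]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Return 1 (brake) if the weighted sum is positive, else 0 (continue)."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical training data: (distance_m, closing_speed_mps) -> brake?
samples = [(0.5, 1.0), (0.8, 0.9), (3.0, 0.1), (2.5, 0.2), (0.6, 0.7), (2.8, 0.0)]
labels = [1, 1, 0, 0, 1, 0]

weights, bias = train_perceptron(samples, labels)

# Situations the robot has not seen before.
print(predict(weights, bias, (0.4, 0.8)))  # close and approaching
print(predict(weights, bias, (3.5, 0.1)))  # far away and slow
```

Nobody wrote a rule such as "brake when closer than one meter"; the decision boundary comes from the examples. Real robots use far larger models and datasets, but the principle of adjusting parameters in response to errors is the same.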

Example: AlphaGo, the AI program that defeated world champion Go players, learned the game by studying millions of positions from human expert games and then playing against itself. It did not just memorize moves; it learned strategies. AlphaGo is software rather than a robot, but it shows the kind of learning and adaptation an intelligent robot needs.

3. Reasoning and Decision-Making

Once a robot has gathered sensory data and learned from previous experiences, it must be able to reason and make decisions. This involves the robot’s ability to evaluate different options, consider potential outcomes, and choose the best course of action.

Decision-making in intelligent robots is often based on algorithms like decision trees, reinforcement learning, or probabilistic reasoning models. These algorithms allow the robot to simulate various scenarios and select actions with the highest probability of success.
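
A simple form of probabilistic decision-making can be sketched as follows. Instead of picking the action with the highest probability of success alone, this example scores each action by its expected utility, meaning each possible outcome's value weighted by its probability, which also accounts for how costly a failure would be. The action names, probabilities, and utility values are hypothetical, as are the function names `expected_utility` and `choose_action`.

```python
def expected_utility(outcomes):
    """Sum of probability * utility over an action's possible outcomes."""
    return sum(probability * utility for probability, utility in outcomes)


def choose_action(actions):
    """Return the name of the action with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical options for a robot facing a blocked aisle.
# Each action maps to a list of (probability, utility) outcomes.
actions = {
    "go_around": [(0.9, 8), (0.1, -20)],      # usually works, small risk
    "wait": [(1.0, 3)],                       # safe but slow
    "push_through": [(0.5, 10), (0.5, -30)],  # fast but risky
}

for name, outcomes in actions.items():
    print(f"{name}: {expected_utility(outcomes):.1f}")

print("chosen:", choose_action(actions))
```

With these made-up numbers the robot prefers going around: waiting is safer but has a lower payoff, and pushing through has a high reward that is outweighed by the cost of failing half the time.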

Example: In industrial robotics, a robot tasked with sorting packages must reason through its options, deciding which item to grab based on size, weight, and destination, among other factors. An intelligent robot would be able to make these decisions quickly and accurately, adjusting as conditions change.

4. Autonomy and Action

Intelligent robots must not only think but also act autonomously. Autonomy is what distinguishes robots from traditional machines that rely on human input for operation. Autonomous robots can perform tasks with minimal supervision, making decisions in real time and executing actions without direct human intervention.

However, autonomy in robots doesn’t mean they are free to act recklessly. Instead, autonomy is governed by carefully designed systems that ensure the robot’s actions are aligned with its goals and ethical guidelines.

Example: Robots in warehouses, such as Amazon’s Kiva robots (now part of Amazon Robotics), operate autonomously: they move inventory, avoid obstacles, and coordinate with other robots. These robots can navigate the warehouse efficiently, even when new obstacles are introduced, showcasing both their autonomy and intelligent behavior.
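
Re-planning around a new obstacle can be illustrated with a toy example. The sketch below assumes the warehouse floor is a small grid and uses breadth-first search to find a shortest route; it is not a description of how Amazon’s robots actually plan their paths. The grid layout, the cell coordinates, and the function name `shortest_path` are hypothetical.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a grid where 0 is free and 1 is blocked.

    Returns a list of (row, col) cells from start to goal, or None if
    the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}  # also serves as the visited set
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if 0 <= r < rows and 0 <= c < cols and grid[r][c] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical floor plan: two shelving units block the middle row.
grid = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
start, goal = (0, 0), (2, 3)

print(shortest_path(grid, start, goal))

# A pallet is dropped at (1, 3); the robot plans again with the updated map.
grid[1][3] = 1
print(shortest_path(grid, start, goal))
```

The second call still finds a route of the same length by going down the left side instead. If every cell in the middle row were blocked, the function would return `None`, which a real system would need to handle, for example by waiting or asking for help.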

5. Problem-Solving and Creativity

Problem-solving is another hallmark of intelligence. Truly intelligent robots don’t just follow fixed procedures. They must be capable of tackling novel problems that haven’t been explicitly programmed into them, which calls for creativity and out-of-the-box thinking.

A robot that can generate solutions to unforeseen challenges shows a level of intelligence akin to human creativity. This can include tasks like designing new tools, creating new strategies, or even adjusting to entirely new environments.

Example: Robots used in disaster recovery operations, such as those deployed in collapsed buildings, often need to come up with creative solutions to navigate dangerous, unstable environments. These robots must use their sensors, reasoning, and adaptability to find pathways, avoid obstacles, and prioritize actions to maximize human safety and recovery.

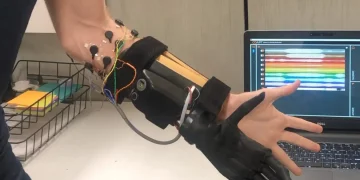

6. Emotional and Social Intelligence

While it may sound like science fiction, emotional and social intelligence is becoming increasingly important for robots, especially those intended to interact with humans. Emotional intelligence involves the ability to recognize, understand, and respond to human emotions in a way that fosters productive interactions.

This aspect of robot intelligence is crucial for applications in healthcare, customer service, and education, where human-robot interaction is frequent. A robot that can understand and respond to emotional cues (e.g., a robot caregiver sensing when a patient is distressed) is more likely to be effective in these settings.

Example: Pepper, a social robot developed by SoftBank Robotics, is designed to recognize and respond to human emotions. It analyzes facial expressions and tone of voice to gauge emotional states and adjusts its behavior accordingly, whether it’s providing comfort, offering assistance, or engaging in conversation.

The Challenges of Achieving True Robot Intelligence

Even with the rapid advancements in AI, robotics, and machine learning, achieving true robot intelligence remains a daunting challenge. Here are some of the primary hurdles:

- Complexity of Human Cognition: Human intelligence is incredibly complex and involves not only reasoning and problem-solving but also emotions, consciousness, and self-awareness. Replicating this complexity in a robot is an immense challenge that is still far from being fully realized.

- Ethical Considerations: As robots become more intelligent and autonomous, questions about ethics and morality arise. How should robots make decisions in situations that involve harm to humans? Who is responsible when a robot makes a mistake? These are important questions that will need to be addressed as robots become more capable.

- Interdisciplinary Challenges: Developing intelligent robots requires expertise across multiple domains, including computer science, neuroscience, engineering, and philosophy. The interdisciplinary nature of the problem means that breakthroughs often come from collaboration across fields, but this also makes progress slower.

- Hardware Limitations: While software and algorithms have made incredible advances, the hardware required to support true intelligence is still lacking. Robotics requires sophisticated actuators, sensors, and processors that can operate in real time, often under challenging conditions.

Looking Toward the Future

As we look toward the future, the line between human and machine intelligence will continue to blur. We’re witnessing an era where robots are becoming increasingly sophisticated, capable of tasks once thought to be exclusive to humans.

From self-driving cars to advanced medical robots and humanoid assistants, intelligent robots are already making a significant impact. However, achieving a truly autonomous, creative, and emotionally aware robot will require continued advancements in AI, robotics, and our understanding of intelligence itself.

One day, robots may not just be intelligent—they may possess a form of intelligence so advanced that it challenges our very understanding of what it means to be “alive” or “aware.”

Conclusion

What makes a robot truly intelligent is not merely the ability to carry out programmed tasks but the capacity to learn, reason, adapt, and interact in an environment full of uncertainty and change. It’s the integration of sensory input, learning algorithms, decision-making, and autonomy that allows a robot to exhibit behaviors that appear intelligent. As technology advances, robots will continue to evolve, becoming more capable and perhaps even more human-like in their intelligence. However, whether they will ever truly mimic the depth and complexity of human cognition remains to be seen.